n8n

n8n

n8n

Getting Started: n8n

This wiki provides a comprehensive guide to the n8n automation platform, covering everything from the user interface and basic node configuration to creating AI agents and advanced workflow efficiency techniques.

1. Introduction to Agentic AI with n8n

The Age of Agentic Automation

The last decade belonged to workflow automation. The next decade belongs to agentic automation, where systems can reason, retrieve information, and act with intelligence. Many organisations are moving rapidly to adopt AI agents, AI copilots, and retrieval-based intelligence, while others still depend on static documentation, spreadsheets, and manual interventions to support core audit, compliance, and operational processes.

The challenge today is not only about automating tasks. The real challenge is enabling teams to create intelligent agents that can read, interpret, compare, and act using the full context of internal knowledge. Teams must also ensure that these systems operate consistently, transparently, and safely.

What Is n8n

N8n is an open-source automation and integration platform that allows users to connect systems, orchestrate processes, and build intelligent workflows without requiring deep engineering expertise. At its core, n8n provides a visual, node-based environment where business logic, data movement, and automated decisions can be designed with clarity and transparency. What sets n8n apart from traditional automation tools is its hybrid flexibility. Users who prefer a purely visual approach can build complete workflows through drag and drop, while more technical professionals can extend these workflows with custom JavaScript, API calls, and modular components.

In the context of AI and agentic automation, n8n becomes even more powerful. It functions as the execution layer for agents, enabling them to use tools, call APIs, retrieve information, read documents, and trigger actions across an entire ecosystem. It also manages credentials, schedules, logging, and monitoring in a structured environment, which allows intelligent agents to operate safely and consistently within enterprise standards. Because n8n integrates seamlessly with language models and retrieval pipelines, it becomes a natural foundation for deploying RAG systems, enterprise knowledge assistants, and autonomous digital auditors. In practical terms, n8n provides both the intelligence layer and the operational backbone required to build systems that can think, retrieve, and act with precision.

Why n8n for Agents and RAG

N8n sits in a unique position between automation, data connectivity, and AI orchestration. Where standard AI tools can only generate text, n8n provides the structure needed for intelligence to connect with real-world actions. It allows you to build systems that can read, decide, and act across your ecosystem.

With n8n, you can build RAG systems, agents, and multi-step reasoning flows without deploying complex infrastructure or writing extensive code. Agents become useful because they can rely on n8n to perform tasks such as:

Calling Application Programming Interfaces (APIs)

Reading documents

Writing to databases

Triggering business processes

Executing conditional logic

Creating, retrieving, and analysing context

Storing and reusing knowledge

Applying safety and policy gates

This combination turns n8n into a practical foundation for enterprise-grade agentic automation.

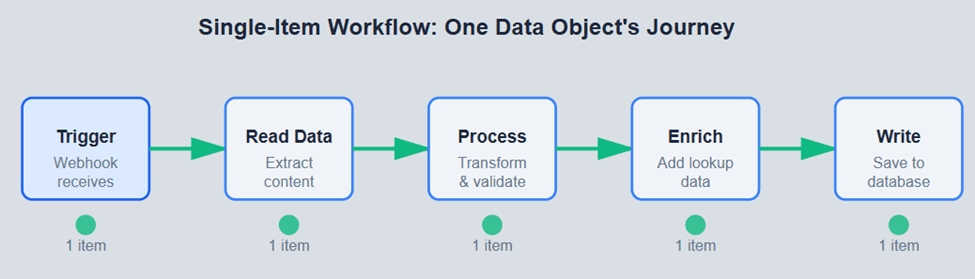

From Workflows to Agents

Traditional automation follows a fixed sequence. You decide every step in advance. Agents follow a different approach. They receive a goal, interpret what tools are available, decide what to do, and execute intermediate steps independently.

However, agents still require structure, governance, and real-world integration points. This is what n8n provides. It gives agents the tools to take action, along with the controls needed to ensure reliability, transparency, and traceability.

Why RAG Matters

AI agents are only as strong as the information they can understand. Retrieval Augmented Generation, or RAG, connects AI reasoning with your organisation’s internal policies, historical findings, evidence repositories, and operational documentation. This capability is transformative for audit, compliance, risk, operations, and financial oversight. RAG systems built with n8n can:

Retrieve relevant policy sections

Match evidence to control descriptions

Detect misalignments

Draft findings using real organisational context

Validate exceptions

Support continuous monitoring

Prepare and store audit evidence

Trigger alerts based on reasoning with cited sources

Instead of relying on memorised model knowledge, your AI systems reference the documents that matter.

Who This Guide Is For

This guide is designed for professionals who are ready to move beyond static automation and towards intelligent, self-improving systems. It is particularly relevant for internal auditors, external auditors, SOX and compliance teams, risk and financial control functions, and operational leaders responsible for maintaining oversight across complex environments. Automation specialists and AI transformation teams will also find this guide valuable, especially when seeking practical ways to deploy agentic systems within existing infrastructures.

Regardless of your background, the objective is the same. You want to automate evidence collection, reduce repetitive work, and create workflows capable of reasoning with organisational knowledge. Whether your goal is to build a simple retrieval assistant or a fully autonomous digital auditor, this guide provides the foundation to design agentic systems that operate with reliability and governance.

The Art of the Possible with n8n Agents

Combining agentic intelligence with n8n’s orchestration capabilities unlocks a range of new possibilities that extend far beyond traditional workflow automation. For example, an agent can interpret policy requirements, perform live control tests, and raise exceptions based on evidence retrieved from multiple systems. A RAG-enhanced assistant can assemble audit documentation automatically by extracting relevant wording from internal repositories and mapping it to evidence already collected.

Reconciliation agents can compare data from several sources and resolve differences without human intervention. Daily SOX evidence bots can run quietly in the background, preparing complete documentation packs long before a control owner requests them. Even governance-focused agents can validate business decisions before execution, ensuring alignment with internal standards.

These capabilities fundamentally reshape the relationship between human judgement and system automation. Instead of replacing human insight, n8n agents elevate it. Routine work is delegated to the system, while auditors and analysts focus on interpretation, investigation, and strategic oversight.

Building a Culture of Agentic Thinking

The purpose of this guide extends beyond teaching n8n’s technical features. It aims to help teams adopt a mindset built around intelligent systems that can reason and act on their behalf. This requires thinking in terms of goals rather than individual steps and structuring knowledge in a way that agents can access and retrieve with precision. It involves providing agents with safe and clearly defined tools, supported by guardrails that ensure consistent and policy-aligned outcomes.

Teams must also learn to create reusable automation components that support autonomy, rather than building narrowly scoped workflows that cannot adapt or expand. Finally, designing systems that learn from organisational knowledge is essential, ensuring that agents grow more capable as documentation, processes, and policies evolve. By embracing these principles, organisations can transition from manual, task-oriented work to intelligent, proactive systems that enhance every aspect of operational assurance and audit oversight.

As you move into the next chapters, you will learn how to design and govern AI agents and RAG systems using n8n. These capabilities will allow your team to modernise processes, reduce manual effort, and strengthen oversight through intelligent automation.

1. Introduction to Agentic AI with n8n

The Age of Agentic Automation

The last decade belonged to workflow automation. The next decade belongs to agentic automation, where systems can reason, retrieve information, and act with intelligence. Many organisations are moving rapidly to adopt AI agents, AI copilots, and retrieval-based intelligence, while others still depend on static documentation, spreadsheets, and manual interventions to support core audit, compliance, and operational processes.

The challenge today is not only about automating tasks. The real challenge is enabling teams to create intelligent agents that can read, interpret, compare, and act using the full context of internal knowledge. Teams must also ensure that these systems operate consistently, transparently, and safely.

What Is n8n

N8n is an open-source automation and integration platform that allows users to connect systems, orchestrate processes, and build intelligent workflows without requiring deep engineering expertise. At its core, n8n provides a visual, node-based environment where business logic, data movement, and automated decisions can be designed with clarity and transparency. What sets n8n apart from traditional automation tools is its hybrid flexibility. Users who prefer a purely visual approach can build complete workflows through drag and drop, while more technical professionals can extend these workflows with custom JavaScript, API calls, and modular components.

In the context of AI and agentic automation, n8n becomes even more powerful. It functions as the execution layer for agents, enabling them to use tools, call APIs, retrieve information, read documents, and trigger actions across an entire ecosystem. It also manages credentials, schedules, logging, and monitoring in a structured environment, which allows intelligent agents to operate safely and consistently within enterprise standards. Because n8n integrates seamlessly with language models and retrieval pipelines, it becomes a natural foundation for deploying RAG systems, enterprise knowledge assistants, and autonomous digital auditors. In practical terms, n8n provides both the intelligence layer and the operational backbone required to build systems that can think, retrieve, and act with precision.

Why n8n for Agents and RAG

N8n sits in a unique position between automation, data connectivity, and AI orchestration. Where standard AI tools can only generate text, n8n provides the structure needed for intelligence to connect with real-world actions. It allows you to build systems that can read, decide, and act across your ecosystem.

With n8n, you can build RAG systems, agents, and multi-step reasoning flows without deploying complex infrastructure or writing extensive code. Agents become useful because they can rely on n8n to perform tasks such as:

Calling Application Programming Interfaces (APIs)

Reading documents

Writing to databases

Triggering business processes

Executing conditional logic

Creating, retrieving, and analysing context

Storing and reusing knowledge

Applying safety and policy gates

This combination turns n8n into a practical foundation for enterprise-grade agentic automation.

From Workflows to Agents

Traditional automation follows a fixed sequence. You decide every step in advance. Agents follow a different approach. They receive a goal, interpret what tools are available, decide what to do, and execute intermediate steps independently.

However, agents still require structure, governance, and real-world integration points. This is what n8n provides. It gives agents the tools to take action, along with the controls needed to ensure reliability, transparency, and traceability.

Why RAG Matters

AI agents are only as strong as the information they can understand. Retrieval Augmented Generation, or RAG, connects AI reasoning with your organisation’s internal policies, historical findings, evidence repositories, and operational documentation. This capability is transformative for audit, compliance, risk, operations, and financial oversight. RAG systems built with n8n can:

Retrieve relevant policy sections

Match evidence to control descriptions

Detect misalignments

Draft findings using real organisational context

Validate exceptions

Support continuous monitoring

Prepare and store audit evidence

Trigger alerts based on reasoning with cited sources

Instead of relying on memorised model knowledge, your AI systems reference the documents that matter.

Who This Guide Is For

This guide is designed for professionals who are ready to move beyond static automation and towards intelligent, self-improving systems. It is particularly relevant for internal auditors, external auditors, SOX and compliance teams, risk and financial control functions, and operational leaders responsible for maintaining oversight across complex environments. Automation specialists and AI transformation teams will also find this guide valuable, especially when seeking practical ways to deploy agentic systems within existing infrastructures.

Regardless of your background, the objective is the same. You want to automate evidence collection, reduce repetitive work, and create workflows capable of reasoning with organisational knowledge. Whether your goal is to build a simple retrieval assistant or a fully autonomous digital auditor, this guide provides the foundation to design agentic systems that operate with reliability and governance.

The Art of the Possible with n8n Agents

Combining agentic intelligence with n8n’s orchestration capabilities unlocks a range of new possibilities that extend far beyond traditional workflow automation. For example, an agent can interpret policy requirements, perform live control tests, and raise exceptions based on evidence retrieved from multiple systems. A RAG-enhanced assistant can assemble audit documentation automatically by extracting relevant wording from internal repositories and mapping it to evidence already collected.

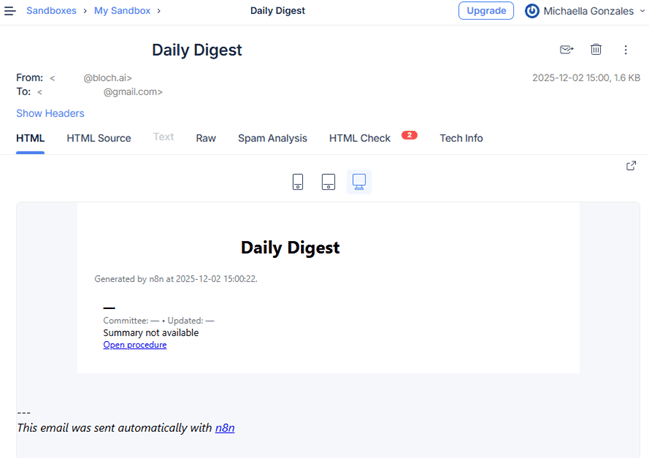

Reconciliation agents can compare data from several sources and resolve differences without human intervention. Daily SOX evidence bots can run quietly in the background, preparing complete documentation packs long before a control owner requests them. Even governance-focused agents can validate business decisions before execution, ensuring alignment with internal standards.

These capabilities fundamentally reshape the relationship between human judgement and system automation. Instead of replacing human insight, n8n agents elevate it. Routine work is delegated to the system, while auditors and analysts focus on interpretation, investigation, and strategic oversight.

Building a Culture of Agentic Thinking

The purpose of this guide extends beyond teaching n8n’s technical features. It aims to help teams adopt a mindset built around intelligent systems that can reason and act on their behalf. This requires thinking in terms of goals rather than individual steps and structuring knowledge in a way that agents can access and retrieve with precision. It involves providing agents with safe and clearly defined tools, supported by guardrails that ensure consistent and policy-aligned outcomes.

Teams must also learn to create reusable automation components that support autonomy, rather than building narrowly scoped workflows that cannot adapt or expand. Finally, designing systems that learn from organisational knowledge is essential, ensuring that agents grow more capable as documentation, processes, and policies evolve. By embracing these principles, organisations can transition from manual, task-oriented work to intelligent, proactive systems that enhance every aspect of operational assurance and audit oversight.

As you move into the next chapters, you will learn how to design and govern AI agents and RAG systems using n8n. These capabilities will allow your team to modernise processes, reduce manual effort, and strengthen oversight through intelligent automation.

1. Introduction to Agentic AI with n8n

The Age of Agentic Automation

The last decade belonged to workflow automation. The next decade belongs to agentic automation, where systems can reason, retrieve information, and act with intelligence. Many organisations are moving rapidly to adopt AI agents, AI copilots, and retrieval-based intelligence, while others still depend on static documentation, spreadsheets, and manual interventions to support core audit, compliance, and operational processes.

The challenge today is not only about automating tasks. The real challenge is enabling teams to create intelligent agents that can read, interpret, compare, and act using the full context of internal knowledge. Teams must also ensure that these systems operate consistently, transparently, and safely.

What Is n8n

N8n is an open-source automation and integration platform that allows users to connect systems, orchestrate processes, and build intelligent workflows without requiring deep engineering expertise. At its core, n8n provides a visual, node-based environment where business logic, data movement, and automated decisions can be designed with clarity and transparency. What sets n8n apart from traditional automation tools is its hybrid flexibility. Users who prefer a purely visual approach can build complete workflows through drag and drop, while more technical professionals can extend these workflows with custom JavaScript, API calls, and modular components.

In the context of AI and agentic automation, n8n becomes even more powerful. It functions as the execution layer for agents, enabling them to use tools, call APIs, retrieve information, read documents, and trigger actions across an entire ecosystem. It also manages credentials, schedules, logging, and monitoring in a structured environment, which allows intelligent agents to operate safely and consistently within enterprise standards. Because n8n integrates seamlessly with language models and retrieval pipelines, it becomes a natural foundation for deploying RAG systems, enterprise knowledge assistants, and autonomous digital auditors. In practical terms, n8n provides both the intelligence layer and the operational backbone required to build systems that can think, retrieve, and act with precision.

Why n8n for Agents and RAG

N8n sits in a unique position between automation, data connectivity, and AI orchestration. Where standard AI tools can only generate text, n8n provides the structure needed for intelligence to connect with real-world actions. It allows you to build systems that can read, decide, and act across your ecosystem.

With n8n, you can build RAG systems, agents, and multi-step reasoning flows without deploying complex infrastructure or writing extensive code. Agents become useful because they can rely on n8n to perform tasks such as:

Calling Application Programming Interfaces (APIs)

Reading documents

Writing to databases

Triggering business processes

Executing conditional logic

Creating, retrieving, and analysing context

Storing and reusing knowledge

Applying safety and policy gates

This combination turns n8n into a practical foundation for enterprise-grade agentic automation.

From Workflows to Agents

Traditional automation follows a fixed sequence. You decide every step in advance. Agents follow a different approach. They receive a goal, interpret what tools are available, decide what to do, and execute intermediate steps independently.

However, agents still require structure, governance, and real-world integration points. This is what n8n provides. It gives agents the tools to take action, along with the controls needed to ensure reliability, transparency, and traceability.

Why RAG Matters

AI agents are only as strong as the information they can understand. Retrieval Augmented Generation, or RAG, connects AI reasoning with your organisation’s internal policies, historical findings, evidence repositories, and operational documentation. This capability is transformative for audit, compliance, risk, operations, and financial oversight. RAG systems built with n8n can:

Retrieve relevant policy sections

Match evidence to control descriptions

Detect misalignments

Draft findings using real organisational context

Validate exceptions

Support continuous monitoring

Prepare and store audit evidence

Trigger alerts based on reasoning with cited sources

Instead of relying on memorised model knowledge, your AI systems reference the documents that matter.

Who This Guide Is For

This guide is designed for professionals who are ready to move beyond static automation and towards intelligent, self-improving systems. It is particularly relevant for internal auditors, external auditors, SOX and compliance teams, risk and financial control functions, and operational leaders responsible for maintaining oversight across complex environments. Automation specialists and AI transformation teams will also find this guide valuable, especially when seeking practical ways to deploy agentic systems within existing infrastructures.

Regardless of your background, the objective is the same. You want to automate evidence collection, reduce repetitive work, and create workflows capable of reasoning with organisational knowledge. Whether your goal is to build a simple retrieval assistant or a fully autonomous digital auditor, this guide provides the foundation to design agentic systems that operate with reliability and governance.

The Art of the Possible with n8n Agents

Combining agentic intelligence with n8n’s orchestration capabilities unlocks a range of new possibilities that extend far beyond traditional workflow automation. For example, an agent can interpret policy requirements, perform live control tests, and raise exceptions based on evidence retrieved from multiple systems. A RAG-enhanced assistant can assemble audit documentation automatically by extracting relevant wording from internal repositories and mapping it to evidence already collected.

Reconciliation agents can compare data from several sources and resolve differences without human intervention. Daily SOX evidence bots can run quietly in the background, preparing complete documentation packs long before a control owner requests them. Even governance-focused agents can validate business decisions before execution, ensuring alignment with internal standards.

These capabilities fundamentally reshape the relationship between human judgement and system automation. Instead of replacing human insight, n8n agents elevate it. Routine work is delegated to the system, while auditors and analysts focus on interpretation, investigation, and strategic oversight.

Building a Culture of Agentic Thinking

The purpose of this guide extends beyond teaching n8n’s technical features. It aims to help teams adopt a mindset built around intelligent systems that can reason and act on their behalf. This requires thinking in terms of goals rather than individual steps and structuring knowledge in a way that agents can access and retrieve with precision. It involves providing agents with safe and clearly defined tools, supported by guardrails that ensure consistent and policy-aligned outcomes.

Teams must also learn to create reusable automation components that support autonomy, rather than building narrowly scoped workflows that cannot adapt or expand. Finally, designing systems that learn from organisational knowledge is essential, ensuring that agents grow more capable as documentation, processes, and policies evolve. By embracing these principles, organisations can transition from manual, task-oriented work to intelligent, proactive systems that enhance every aspect of operational assurance and audit oversight.

As you move into the next chapters, you will learn how to design and govern AI agents and RAG systems using n8n. These capabilities will allow your team to modernise processes, reduce manual effort, and strengthen oversight through intelligent automation.

2. The n8n User Interface

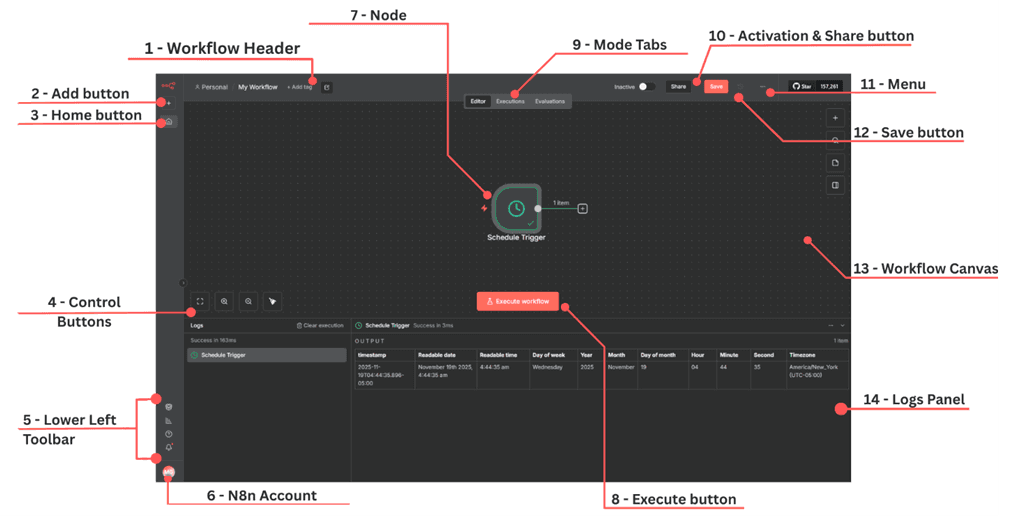

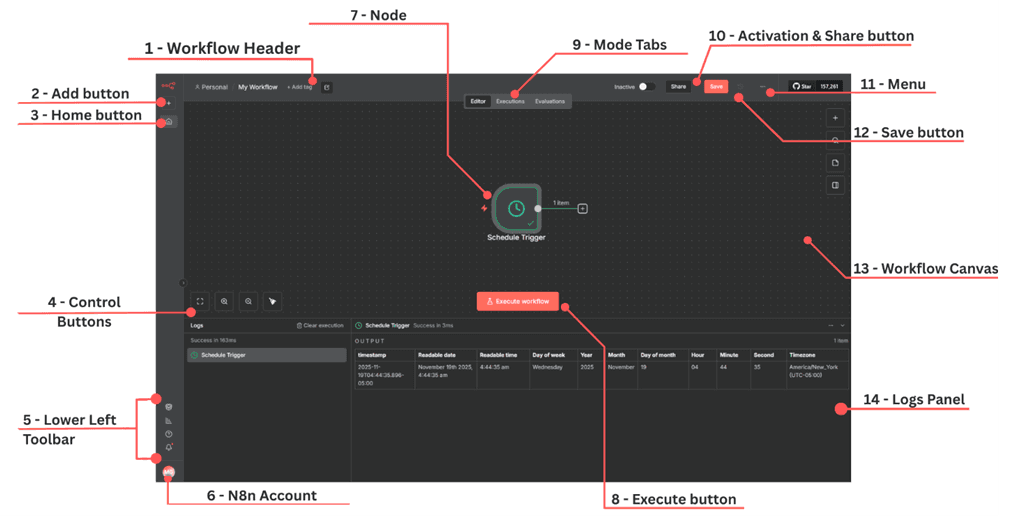

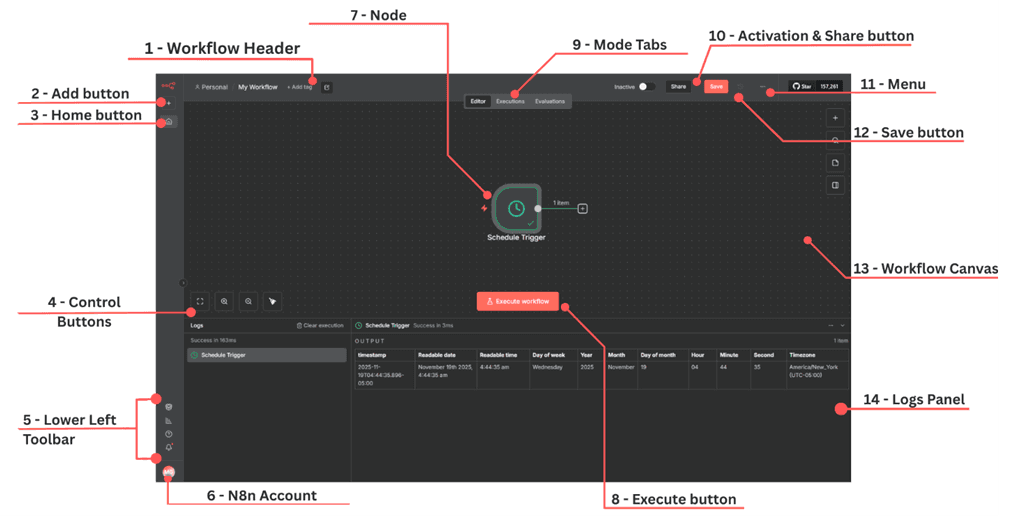

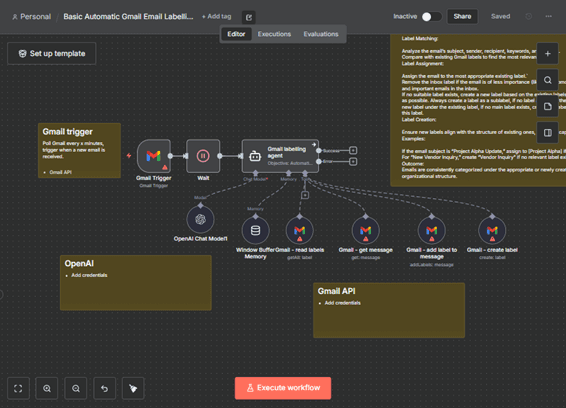

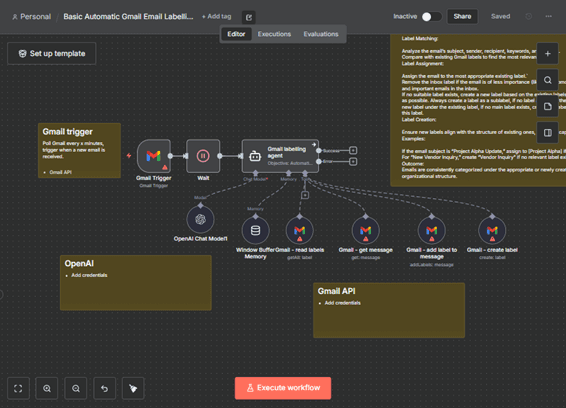

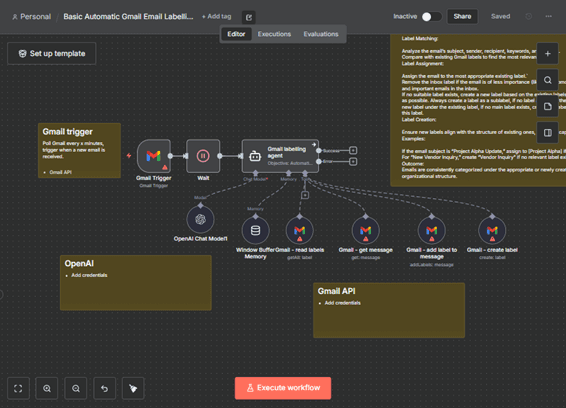

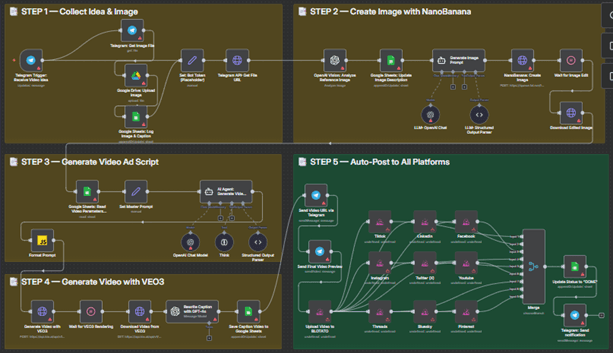

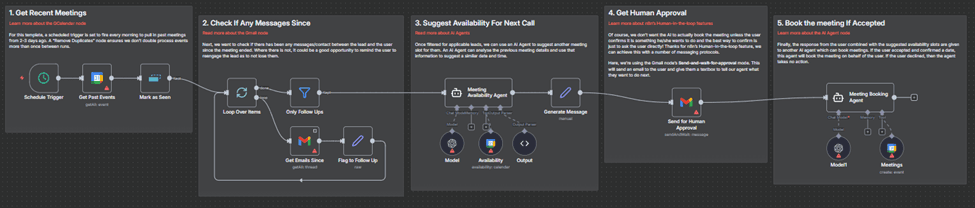

When you first open n8n, the interface is designed to give you a clear view of your workflow canvas, execution history, and essential tools. The layout is simple, visual, and focused on helping you build and evaluate workflows efficiently. The screenshot you shared contains several core components, which are described below.

Figure 3.0 — General n8n Workflow Edit User Interface

Workflow Header (Top Bar) Located at the top of the screen.

What it contains:

Workspace Location (e.g., Personal)

Workflow Name (default: “My Workflow”)

Tag Controls (Add tag)

Mode Tabs:

Editor: Build and edit workflow logic

Executions: View past workflow runs

Evaluations: View AI model-related evaluations (for agent or LLM workflows)

Activation Toggle: Switch the workflow between inactive and active

Share Button: Share the workflow or collaboration settings

Save Indicator: Shows whether your workflow has been saved

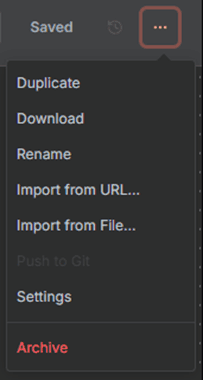

Menu (···): Additional workflow options such as versioning, exports, and settings

This bar controls workflow identity, viewing modes, activation, and general workflow settings.

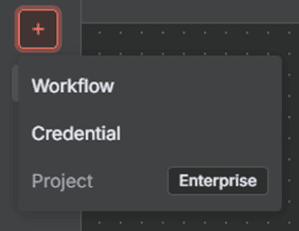

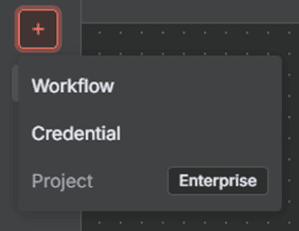

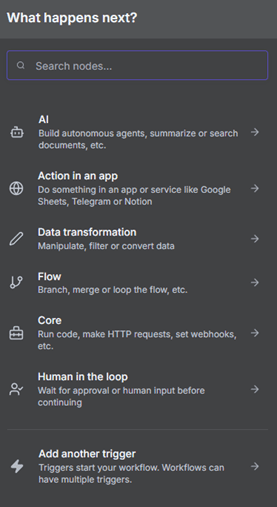

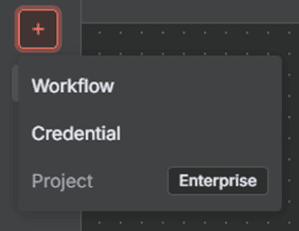

Add Button The plus symbol on the right-side toolbar.

Figure 3.1 — Add button menu options

Opens the node selector so you can create a new workflow, add new credentials, or even create a new project (only available in the enterprise version of n8n).

Home Button Located on the left vertical toolbar, it returns you to the main n8n home area where you can browse your workflows, view templates, or switch workspaces.

Control Buttons (Below Start Block) Three small buttons below the left block. They help you navigate and organise the workflow visually. These include:

Fit to Screen (expanding arrows icon) Adjusts the zoom so the full workflow is in view. Press one (1) on your keyboard as a shortcut.

Zoom In Increases magnification for detailed editing. Press the plus sign (+) on your keyboard as a shortcut.

Zoom Out Decreases magnification to see more of the workflow. Press the minus sign (+) on your keyboard as a shortcut.

Toggle Grid or Snap Behaviour (third icon in some layouts)Helps with aligning nodes neatly. Press shift (⇧ ) + Alt + T on your keyboard as a shortcut.

Figure 3.2 — Control buttons

Lower Left Toolbar The vertical set of icons on the left side of the interface. What it contains:

Templates

Insights

Help

What’s new?

Provides quick global navigation across all n8n areas.

n8n Account The circular icon with user initials at the bottom-left of the screen. Opens user account settings, workspace preferences, profile details, and other personal configuration options.

Node The central circular element shown on the canvas, for example a Schedule Trigger. Nodes represent the individual steps of your workflow. Each node performs a specific action such as retrieving data, transforming information, triggering a schedule, or invoking an AI model. Nodes are the building blocks from which complete automations and agent chains are constructed.

When you click the + button to add a node, you will see a lot of options that might suit the workflow you want to build.

Figure 3.3 — Adding a new node

Execute Button Located directly below the node.Runs the workflow manually. This is essential for testing and validating each step before the workflow is activated.

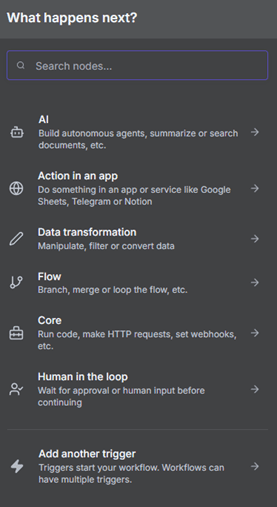

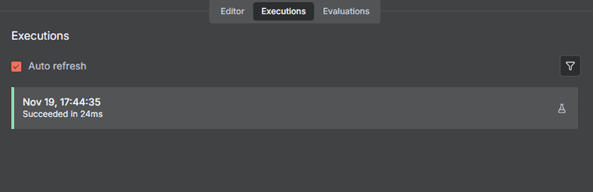

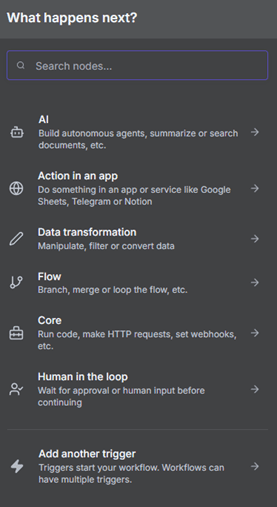

Mode Tabs Located in the workflow header just above the canvas which contains:

Editor: The design view where you build workflows

Executions: A list of past workflow runs

Figure 3.4 — Executions page

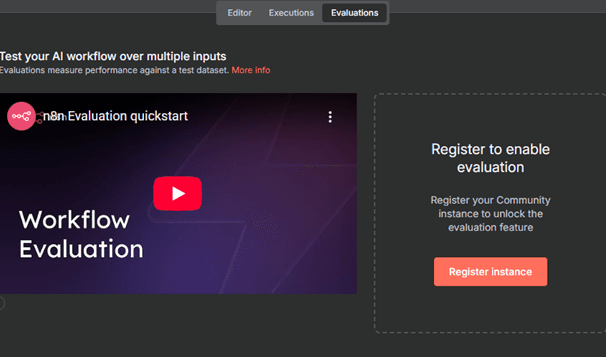

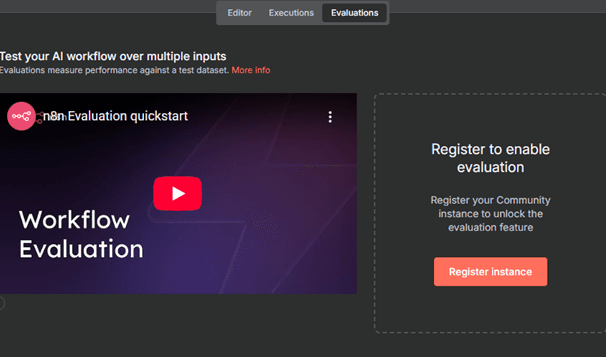

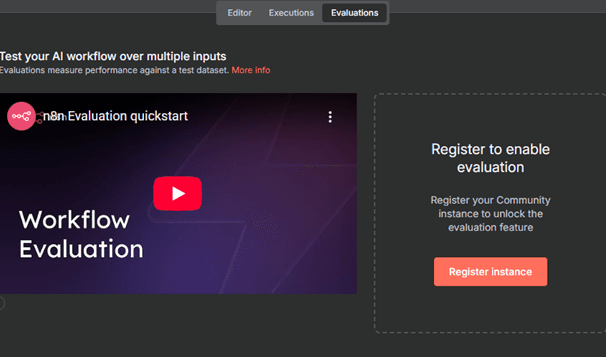

Evaluations: A review space for LLM or agent-related outputs

Figure 3.5 — Evaluations page

These tabs allow you to move between designing your workflow, viewing past behaviour, and evaluating model responses.

Activation and Share Button Found on the right side of the header. The activation toggle enables or disables the workflow so it can respond to triggers. The share button allows you to collaborate with others or manage access.

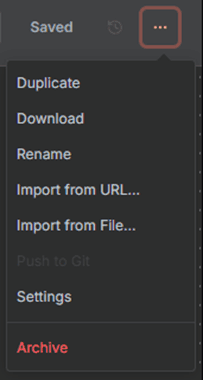

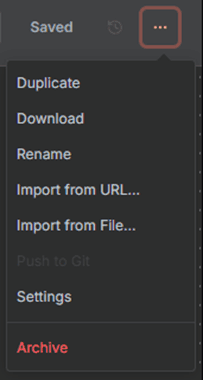

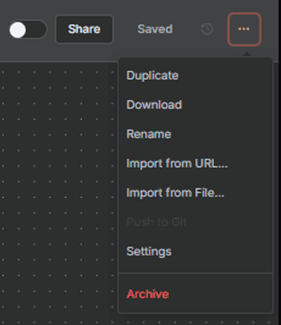

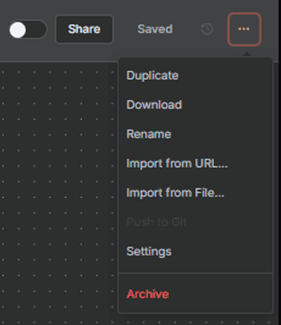

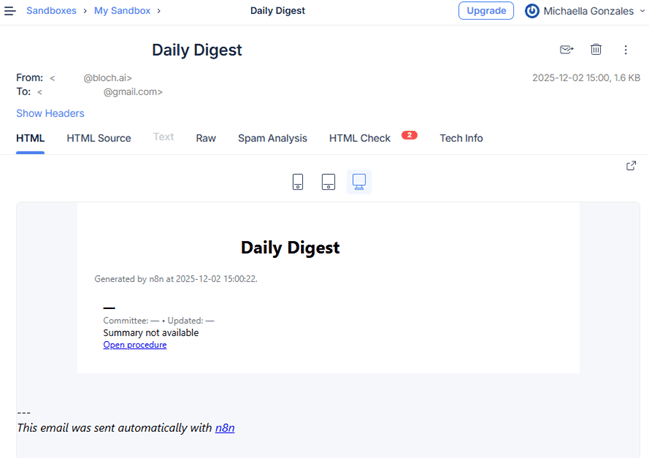

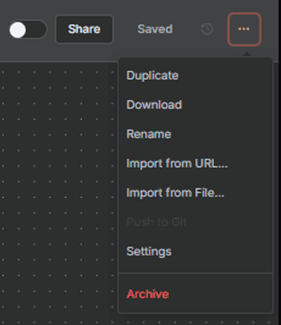

Menu Located beside the share and save controls. It has options for duplication, exporting, version history, and workflow settings.

Figure 3.6 — Menu button options

The Menu provides advanced configuration options for managing the workflow at a structural level.

Save Button Located in the header next to the share button. Confirms and stores changes made to the workflow. Even though n8n saves frequently, this button is used to ensure updates are committed explicitly.

Workflow Canvas The central dotted area of the interface where the node appears. The canvas is where workflows are designed. Nodes are added, positioned, and connected to form the automation logic. The visual layout allows you to understand how information flows through your workflow.

Logs Panel The large section at the bottom of the screen.

Figure 3.7 — Log Panel Display Example for Schedule Trigger node

Displays results and outputs from workflow execution. This includes timestamps, retrieved data, errors, conditional paths, and detailed node behaviour. For agent workflows, it may also show reasoning traces and AI decisions.

Summary Table

UI Element | Description |

|---|---|

1. Workflow Header (Top Bar) | Located at the top of the screen. Contains the workspace location, workflow name, tag controls, mode tabs (Editor, Executions, Evaluations), activation toggle, share button, save indicator, and the menu. Controls workflow identity, viewing modes, activation, and general workflow settings. |

2. Add Button | The plus symbol on the right-side toolbar. Opens the node selector where you can create a new workflow, add credentials, or create a new project (available in the enterprise version). See Figure 3.1 for menu options. |

3. Home Button | Located on the left vertical toolbar. Returns you to the main n8n home area where you can browse your workflows, access templates, or switch workspaces. |

4. Control Buttons | Found below the initial node block. Used to navigate and organise the canvas. Includes Fit to Screen (shortcut: 1), Zoom In (shortcut: +), Zoom Out (shortcut: -), and Toggle Grid/Snap (shortcut: Shift + Alt + T). See Figure 3.2 for control button icons. |

5. Lower Left Toolbar | Vertical set of icons on the far-left side. Contains Templates, Insights, Help, and What’s New. Provides global navigation across all n8n areas. |

6. n8n Account | Circular icon with user initials at the bottom-left corner. Opens account settings, profile information, workspace preferences, and organisation-related options. |

7. Node | The circular element displayed on the canvas, for example a Schedule Trigger. Represents a step in the workflow. Each node performs a specific action such as retrieving data, applying logic, triggering schedules, or invoking AI. Forms the basic building blocks of automations and agent chains. |

8. Execute Button | Located directly below the selected node. Runs the workflow manually, which is essential during testing and validation before activation. |

9. Mode Tabs | Displayed in the workflow header just above the canvas. Includes Editor (design view), Executions (past run history), and Evaluations (LLM and agent output review). Allows smooth movement between workflow design, past behaviour, and model assessment. |

10. Activation and Share Button | Positioned on the right side of the header. Activation turns the workflow on or off. Sharing allows collaboration and access control. |

11. Menu | Located beside the share and save controls. Includes options for duplication, exporting, version history, environment settings, and other workflow configuration tools. |

12. Save Button | Found next to the share button. Confirms and stores workflow changes. Although n8n autosaves frequently, this button ensures explicit commit of edits. |

13. Workflow Canvas | The dotted central area where nodes are placed. This is where workflows are designed, arranged, and connected. The canvas visually represents the logic and flow of automation. |

14. Logs Panel | The large bottom section of the interface. Displays execution results including timestamps, outputs, errors, and decision paths. For agent workflows, may also include reasoning traces and AI evaluation details. |

The n8n Management Views: Credentials, Executions, and Data Tables Beyond the workflow editor, n8n provides several dedicated management views that support automation, agent workflows, and RAG systems at an operational level. Each of these areas serves a specific purpose, helping you control the security, monitoring, and data persistence associated with your workflows. The sections below describe the key components visible in the screenshots and explain their roles within the broader automation environment.

Credentials View

The Credentials view is where all connection details for external services are created and maintained. These credentials allow workflows to authenticate securely with APIs, databases, email servers, vector stores, and AI model providers. It filters the interface to show only the credentials you own or have access to. It separates authentication assets from workflows and execution logs.

Search Bar Allows you to find credentials quickly by name. This becomes essential when working with large automation environments where dozens of authenticated connections may exist.

Sort Dropdown Sorts credentials by criteria such as last updated, creation date, or alphabetical order. This helps maintain order and supports security audits by showing which credentials were updated recently.

Filter Button Opens filtering options to narrow down credential sets. Particularly useful when working across multiple environments or departments.

Credential Cards Each card represents a single credential, such as SMTP, OpenAI, Baserow, or Gemini.

Each card contains:

Credential name

Provider or service type

Last updated date

Creation date

Workspace ownership

These cards help you understand at a glance how recently credentials were updated and which services they connect to. This is especially important for agent workflows that rely on model APIs, database connections, or RAG document stores.

Create Credential Button Located in the top right corner. Opens the credential creation window. You can configure API keys, OAuth details, or other authentication parameters. This is one of the most important controls for expanding your automation ecosystem safely.

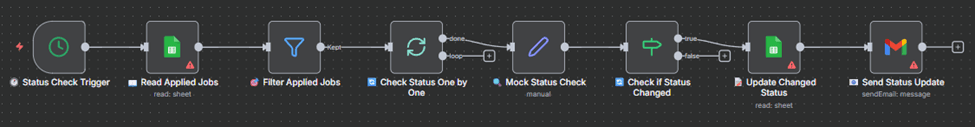

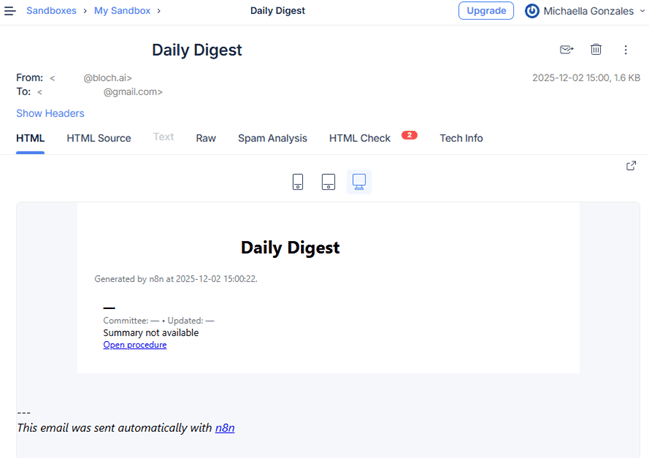

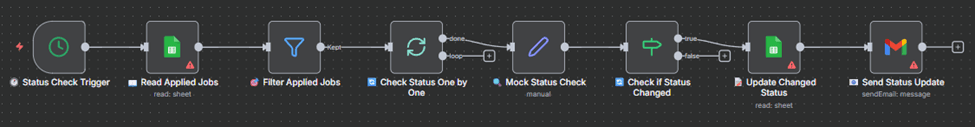

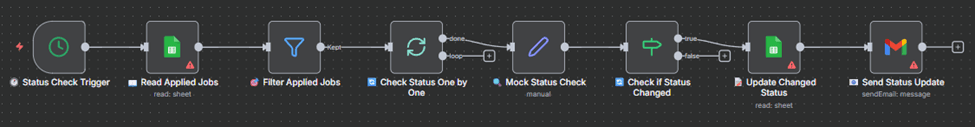

Executions View

The Executions view is essential for understanding how workflows behave over time. It provides a complete audit trail of every run, showing input conditions, outcomes, and performance. This is where you inspect workflow failures, validate success rates, and confirm that agent behaviour is consistent.

Filter Button Lets you filter execution logs by workflow name, status, time range, or other criteria. This is especially useful in environments where many workflows run frequently.

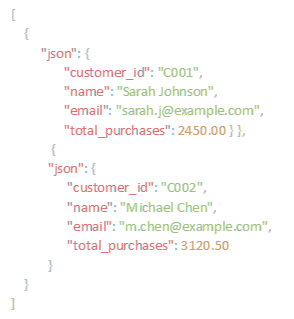

Execution Table Displays key details for every execution:

Workflow name

Status (such as Success or Error)

Start time

Run time

Execution ID

This table provides operational visibility. It helps identify issues such as timeouts, unexpected logic paths, slow-running nodes, or errors produced by AI agents.

Auto Refresh Toggle When enabled, this automatically updates the execution list without refreshing the page. This is useful when monitoring live agent workflows or scheduled tasks.

Create Workflow Button

Allows you to start a new automation directly from this view. Helps streamline operations when you notice inefficiencies in execution data.

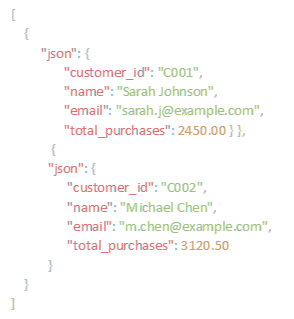

Data Tables View

Data tables allow you to store, retrieve, and share data across workflows. They are particularly important for RAG pipelines, agent memory, historical tracking, and storing evaluation metrics. This tab is often used by users building advanced automations that need to keep state or store structured information between workflow runs.

Empty State Panel

In your screenshot, no data tables are present yet. The panel explains the purpose of data tables:

Persist execution results

Share data across workflows

Store metrics for later evaluation This is crucial for agent systems that need to remember earlier outputs, store retrieval results, or maintain long-term context.

Create Data Table Button

Opens a wizard for creating a new table. You specify the schema, columns, and data types. Data tables become especially useful when:

Building RAG indexing pipelines

Storing embeddings

Recording audit logs

Capturing evaluation metrics for LLM behaviour

Saving intermediate results for multi-step agent workflows

Why These Views Matter

Together, Credentials, Executions, and Data Tables form the operational backbone of intelligent automation.

Credentials provide safe, controlled access to external systems and model providers.

Executions offer complete visibility into automation performance and agent behaviour.

Data Tables enable persistence, memory, and context for advanced workflows and RAG pipelines.

These areas ensure that workflows operate reliably, securely, and transparently, which is essential in environments involving audit, compliance, and any form of agentic automation.

2. The n8n User Interface

When you first open n8n, the interface is designed to give you a clear view of your workflow canvas, execution history, and essential tools. The layout is simple, visual, and focused on helping you build and evaluate workflows efficiently. The screenshot you shared contains several core components, which are described below.

Figure 3.0 — General n8n Workflow Edit User Interface

Workflow Header (Top Bar) Located at the top of the screen.

What it contains:

Workspace Location (e.g., Personal)

Workflow Name (default: “My Workflow”)

Tag Controls (Add tag)

Mode Tabs:

Editor: Build and edit workflow logic

Executions: View past workflow runs

Evaluations: View AI model-related evaluations (for agent or LLM workflows)

Activation Toggle: Switch the workflow between inactive and active

Share Button: Share the workflow or collaboration settings

Save Indicator: Shows whether your workflow has been saved

Menu (···): Additional workflow options such as versioning, exports, and settings

This bar controls workflow identity, viewing modes, activation, and general workflow settings.

Add Button The plus symbol on the right-side toolbar.

Figure 3.1 — Add button menu options

Opens the node selector so you can create a new workflow, add new credentials, or even create a new project (only available in the enterprise version of n8n).

Home Button Located on the left vertical toolbar, it returns you to the main n8n home area where you can browse your workflows, view templates, or switch workspaces.

Control Buttons (Below Start Block) Three small buttons below the left block. They help you navigate and organise the workflow visually. These include:

Fit to Screen (expanding arrows icon) Adjusts the zoom so the full workflow is in view. Press one (1) on your keyboard as a shortcut.

Zoom In Increases magnification for detailed editing. Press the plus sign (+) on your keyboard as a shortcut.

Zoom Out Decreases magnification to see more of the workflow. Press the minus sign (+) on your keyboard as a shortcut.

Toggle Grid or Snap Behaviour (third icon in some layouts)Helps with aligning nodes neatly. Press shift (⇧ ) + Alt + T on your keyboard as a shortcut.

Figure 3.2 — Control buttons

Lower Left Toolbar The vertical set of icons on the left side of the interface. What it contains:

Templates

Insights

Help

What’s new?

Provides quick global navigation across all n8n areas.

n8n Account The circular icon with user initials at the bottom-left of the screen. Opens user account settings, workspace preferences, profile details, and other personal configuration options.

Node The central circular element shown on the canvas, for example a Schedule Trigger. Nodes represent the individual steps of your workflow. Each node performs a specific action such as retrieving data, transforming information, triggering a schedule, or invoking an AI model. Nodes are the building blocks from which complete automations and agent chains are constructed.

When you click the + button to add a node, you will see a lot of options that might suit the workflow you want to build.

Figure 3.3 — Adding a new node

Execute Button Located directly below the node.Runs the workflow manually. This is essential for testing and validating each step before the workflow is activated.

Mode Tabs Located in the workflow header just above the canvas which contains:

Editor: The design view where you build workflows

Executions: A list of past workflow runs

Figure 3.4 — Executions page

Evaluations: A review space for LLM or agent-related outputs

Figure 3.5 — Evaluations page

These tabs allow you to move between designing your workflow, viewing past behaviour, and evaluating model responses.

Activation and Share Button Found on the right side of the header. The activation toggle enables or disables the workflow so it can respond to triggers. The share button allows you to collaborate with others or manage access.

Menu Located beside the share and save controls. It has options for duplication, exporting, version history, and workflow settings.

Figure 3.6 — Menu button options

The Menu provides advanced configuration options for managing the workflow at a structural level.

Save Button Located in the header next to the share button. Confirms and stores changes made to the workflow. Even though n8n saves frequently, this button is used to ensure updates are committed explicitly.

Workflow Canvas The central dotted area of the interface where the node appears. The canvas is where workflows are designed. Nodes are added, positioned, and connected to form the automation logic. The visual layout allows you to understand how information flows through your workflow.

Logs Panel The large section at the bottom of the screen.

Figure 3.7 — Log Panel Display Example for Schedule Trigger node

Displays results and outputs from workflow execution. This includes timestamps, retrieved data, errors, conditional paths, and detailed node behaviour. For agent workflows, it may also show reasoning traces and AI decisions.

Summary Table

UI Element | Description |

|---|---|

1. Workflow Header (Top Bar) | Located at the top of the screen. Contains the workspace location, workflow name, tag controls, mode tabs (Editor, Executions, Evaluations), activation toggle, share button, save indicator, and the menu. Controls workflow identity, viewing modes, activation, and general workflow settings. |

2. Add Button | The plus symbol on the right-side toolbar. Opens the node selector where you can create a new workflow, add credentials, or create a new project (available in the enterprise version). See Figure 3.1 for menu options. |

3. Home Button | Located on the left vertical toolbar. Returns you to the main n8n home area where you can browse your workflows, access templates, or switch workspaces. |

4. Control Buttons | Found below the initial node block. Used to navigate and organise the canvas. Includes Fit to Screen (shortcut: 1), Zoom In (shortcut: +), Zoom Out (shortcut: -), and Toggle Grid/Snap (shortcut: Shift + Alt + T). See Figure 3.2 for control button icons. |

5. Lower Left Toolbar | Vertical set of icons on the far-left side. Contains Templates, Insights, Help, and What’s New. Provides global navigation across all n8n areas. |

6. n8n Account | Circular icon with user initials at the bottom-left corner. Opens account settings, profile information, workspace preferences, and organisation-related options. |

7. Node | The circular element displayed on the canvas, for example a Schedule Trigger. Represents a step in the workflow. Each node performs a specific action such as retrieving data, applying logic, triggering schedules, or invoking AI. Forms the basic building blocks of automations and agent chains. |

8. Execute Button | Located directly below the selected node. Runs the workflow manually, which is essential during testing and validation before activation. |

9. Mode Tabs | Displayed in the workflow header just above the canvas. Includes Editor (design view), Executions (past run history), and Evaluations (LLM and agent output review). Allows smooth movement between workflow design, past behaviour, and model assessment. |

10. Activation and Share Button | Positioned on the right side of the header. Activation turns the workflow on or off. Sharing allows collaboration and access control. |

11. Menu | Located beside the share and save controls. Includes options for duplication, exporting, version history, environment settings, and other workflow configuration tools. |

12. Save Button | Found next to the share button. Confirms and stores workflow changes. Although n8n autosaves frequently, this button ensures explicit commit of edits. |

13. Workflow Canvas | The dotted central area where nodes are placed. This is where workflows are designed, arranged, and connected. The canvas visually represents the logic and flow of automation. |

14. Logs Panel | The large bottom section of the interface. Displays execution results including timestamps, outputs, errors, and decision paths. For agent workflows, may also include reasoning traces and AI evaluation details. |

The n8n Management Views: Credentials, Executions, and Data Tables Beyond the workflow editor, n8n provides several dedicated management views that support automation, agent workflows, and RAG systems at an operational level. Each of these areas serves a specific purpose, helping you control the security, monitoring, and data persistence associated with your workflows. The sections below describe the key components visible in the screenshots and explain their roles within the broader automation environment.

Credentials View

The Credentials view is where all connection details for external services are created and maintained. These credentials allow workflows to authenticate securely with APIs, databases, email servers, vector stores, and AI model providers. It filters the interface to show only the credentials you own or have access to. It separates authentication assets from workflows and execution logs.

Search Bar Allows you to find credentials quickly by name. This becomes essential when working with large automation environments where dozens of authenticated connections may exist.

Sort Dropdown Sorts credentials by criteria such as last updated, creation date, or alphabetical order. This helps maintain order and supports security audits by showing which credentials were updated recently.

Filter Button Opens filtering options to narrow down credential sets. Particularly useful when working across multiple environments or departments.

Credential Cards Each card represents a single credential, such as SMTP, OpenAI, Baserow, or Gemini.

Each card contains:

Credential name

Provider or service type

Last updated date

Creation date

Workspace ownership

These cards help you understand at a glance how recently credentials were updated and which services they connect to. This is especially important for agent workflows that rely on model APIs, database connections, or RAG document stores.

Create Credential Button Located in the top right corner. Opens the credential creation window. You can configure API keys, OAuth details, or other authentication parameters. This is one of the most important controls for expanding your automation ecosystem safely.

Executions View

The Executions view is essential for understanding how workflows behave over time. It provides a complete audit trail of every run, showing input conditions, outcomes, and performance. This is where you inspect workflow failures, validate success rates, and confirm that agent behaviour is consistent.

Filter Button Lets you filter execution logs by workflow name, status, time range, or other criteria. This is especially useful in environments where many workflows run frequently.

Execution Table Displays key details for every execution:

Workflow name

Status (such as Success or Error)

Start time

Run time

Execution ID

This table provides operational visibility. It helps identify issues such as timeouts, unexpected logic paths, slow-running nodes, or errors produced by AI agents.

Auto Refresh Toggle When enabled, this automatically updates the execution list without refreshing the page. This is useful when monitoring live agent workflows or scheduled tasks.

Create Workflow Button

Allows you to start a new automation directly from this view. Helps streamline operations when you notice inefficiencies in execution data.

Data Tables View

Data tables allow you to store, retrieve, and share data across workflows. They are particularly important for RAG pipelines, agent memory, historical tracking, and storing evaluation metrics. This tab is often used by users building advanced automations that need to keep state or store structured information between workflow runs.

Empty State Panel

In your screenshot, no data tables are present yet. The panel explains the purpose of data tables:

Persist execution results

Share data across workflows

Store metrics for later evaluation This is crucial for agent systems that need to remember earlier outputs, store retrieval results, or maintain long-term context.

Create Data Table Button

Opens a wizard for creating a new table. You specify the schema, columns, and data types. Data tables become especially useful when:

Building RAG indexing pipelines

Storing embeddings

Recording audit logs

Capturing evaluation metrics for LLM behaviour

Saving intermediate results for multi-step agent workflows

Why These Views Matter

Together, Credentials, Executions, and Data Tables form the operational backbone of intelligent automation.

Credentials provide safe, controlled access to external systems and model providers.

Executions offer complete visibility into automation performance and agent behaviour.

Data Tables enable persistence, memory, and context for advanced workflows and RAG pipelines.

These areas ensure that workflows operate reliably, securely, and transparently, which is essential in environments involving audit, compliance, and any form of agentic automation.

2. The n8n User Interface

When you first open n8n, the interface is designed to give you a clear view of your workflow canvas, execution history, and essential tools. The layout is simple, visual, and focused on helping you build and evaluate workflows efficiently. The screenshot you shared contains several core components, which are described below.

Figure 3.0 — General n8n Workflow Edit User Interface

Workflow Header (Top Bar) Located at the top of the screen.

What it contains:

Workspace Location (e.g., Personal)

Workflow Name (default: “My Workflow”)

Tag Controls (Add tag)

Mode Tabs:

Editor: Build and edit workflow logic

Executions: View past workflow runs

Evaluations: View AI model-related evaluations (for agent or LLM workflows)

Activation Toggle: Switch the workflow between inactive and active

Share Button: Share the workflow or collaboration settings

Save Indicator: Shows whether your workflow has been saved

Menu (···): Additional workflow options such as versioning, exports, and settings

This bar controls workflow identity, viewing modes, activation, and general workflow settings.

Add Button The plus symbol on the right-side toolbar.

Figure 3.1 — Add button menu options

Opens the node selector so you can create a new workflow, add new credentials, or even create a new project (only available in the enterprise version of n8n).

Home Button Located on the left vertical toolbar, it returns you to the main n8n home area where you can browse your workflows, view templates, or switch workspaces.

Control Buttons (Below Start Block) Three small buttons below the left block. They help you navigate and organise the workflow visually. These include:

Fit to Screen (expanding arrows icon) Adjusts the zoom so the full workflow is in view. Press one (1) on your keyboard as a shortcut.

Zoom In Increases magnification for detailed editing. Press the plus sign (+) on your keyboard as a shortcut.

Zoom Out Decreases magnification to see more of the workflow. Press the minus sign (+) on your keyboard as a shortcut.

Toggle Grid or Snap Behaviour (third icon in some layouts)Helps with aligning nodes neatly. Press shift (⇧ ) + Alt + T on your keyboard as a shortcut.

Figure 3.2 — Control buttons

Lower Left Toolbar The vertical set of icons on the left side of the interface. What it contains:

Templates

Insights

Help

What’s new?

Provides quick global navigation across all n8n areas.

n8n Account The circular icon with user initials at the bottom-left of the screen. Opens user account settings, workspace preferences, profile details, and other personal configuration options.

Node The central circular element shown on the canvas, for example a Schedule Trigger. Nodes represent the individual steps of your workflow. Each node performs a specific action such as retrieving data, transforming information, triggering a schedule, or invoking an AI model. Nodes are the building blocks from which complete automations and agent chains are constructed.

When you click the + button to add a node, you will see a lot of options that might suit the workflow you want to build.

Figure 3.3 — Adding a new node

Execute Button Located directly below the node.Runs the workflow manually. This is essential for testing and validating each step before the workflow is activated.

Mode Tabs Located in the workflow header just above the canvas which contains:

Editor: The design view where you build workflows

Executions: A list of past workflow runs

Figure 3.4 — Executions page

Evaluations: A review space for LLM or agent-related outputs

Figure 3.5 — Evaluations page

These tabs allow you to move between designing your workflow, viewing past behaviour, and evaluating model responses.

Activation and Share Button Found on the right side of the header. The activation toggle enables or disables the workflow so it can respond to triggers. The share button allows you to collaborate with others or manage access.

Menu Located beside the share and save controls. It has options for duplication, exporting, version history, and workflow settings.

Figure 3.6 — Menu button options

The Menu provides advanced configuration options for managing the workflow at a structural level.

Save Button Located in the header next to the share button. Confirms and stores changes made to the workflow. Even though n8n saves frequently, this button is used to ensure updates are committed explicitly.

Workflow Canvas The central dotted area of the interface where the node appears. The canvas is where workflows are designed. Nodes are added, positioned, and connected to form the automation logic. The visual layout allows you to understand how information flows through your workflow.

Logs Panel The large section at the bottom of the screen.

Figure 3.7 — Log Panel Display Example for Schedule Trigger node

Displays results and outputs from workflow execution. This includes timestamps, retrieved data, errors, conditional paths, and detailed node behaviour. For agent workflows, it may also show reasoning traces and AI decisions.

Summary Table

UI Element | Description |

|---|---|

1. Workflow Header (Top Bar) | Located at the top of the screen. Contains the workspace location, workflow name, tag controls, mode tabs (Editor, Executions, Evaluations), activation toggle, share button, save indicator, and the menu. Controls workflow identity, viewing modes, activation, and general workflow settings. |

2. Add Button | The plus symbol on the right-side toolbar. Opens the node selector where you can create a new workflow, add credentials, or create a new project (available in the enterprise version). See Figure 3.1 for menu options. |

3. Home Button | Located on the left vertical toolbar. Returns you to the main n8n home area where you can browse your workflows, access templates, or switch workspaces. |

4. Control Buttons | Found below the initial node block. Used to navigate and organise the canvas. Includes Fit to Screen (shortcut: 1), Zoom In (shortcut: +), Zoom Out (shortcut: -), and Toggle Grid/Snap (shortcut: Shift + Alt + T). See Figure 3.2 for control button icons. |

5. Lower Left Toolbar | Vertical set of icons on the far-left side. Contains Templates, Insights, Help, and What’s New. Provides global navigation across all n8n areas. |

6. n8n Account | Circular icon with user initials at the bottom-left corner. Opens account settings, profile information, workspace preferences, and organisation-related options. |

7. Node | The circular element displayed on the canvas, for example a Schedule Trigger. Represents a step in the workflow. Each node performs a specific action such as retrieving data, applying logic, triggering schedules, or invoking AI. Forms the basic building blocks of automations and agent chains. |

8. Execute Button | Located directly below the selected node. Runs the workflow manually, which is essential during testing and validation before activation. |

9. Mode Tabs | Displayed in the workflow header just above the canvas. Includes Editor (design view), Executions (past run history), and Evaluations (LLM and agent output review). Allows smooth movement between workflow design, past behaviour, and model assessment. |

10. Activation and Share Button | Positioned on the right side of the header. Activation turns the workflow on or off. Sharing allows collaboration and access control. |

11. Menu | Located beside the share and save controls. Includes options for duplication, exporting, version history, environment settings, and other workflow configuration tools. |

12. Save Button | Found next to the share button. Confirms and stores workflow changes. Although n8n autosaves frequently, this button ensures explicit commit of edits. |

13. Workflow Canvas | The dotted central area where nodes are placed. This is where workflows are designed, arranged, and connected. The canvas visually represents the logic and flow of automation. |

14. Logs Panel | The large bottom section of the interface. Displays execution results including timestamps, outputs, errors, and decision paths. For agent workflows, may also include reasoning traces and AI evaluation details. |

The n8n Management Views: Credentials, Executions, and Data Tables Beyond the workflow editor, n8n provides several dedicated management views that support automation, agent workflows, and RAG systems at an operational level. Each of these areas serves a specific purpose, helping you control the security, monitoring, and data persistence associated with your workflows. The sections below describe the key components visible in the screenshots and explain their roles within the broader automation environment.

Credentials View

The Credentials view is where all connection details for external services are created and maintained. These credentials allow workflows to authenticate securely with APIs, databases, email servers, vector stores, and AI model providers. It filters the interface to show only the credentials you own or have access to. It separates authentication assets from workflows and execution logs.

Search Bar Allows you to find credentials quickly by name. This becomes essential when working with large automation environments where dozens of authenticated connections may exist.

Sort Dropdown Sorts credentials by criteria such as last updated, creation date, or alphabetical order. This helps maintain order and supports security audits by showing which credentials were updated recently.

Filter Button Opens filtering options to narrow down credential sets. Particularly useful when working across multiple environments or departments.

Credential Cards Each card represents a single credential, such as SMTP, OpenAI, Baserow, or Gemini.

Each card contains:

Credential name

Provider or service type

Last updated date

Creation date

Workspace ownership

These cards help you understand at a glance how recently credentials were updated and which services they connect to. This is especially important for agent workflows that rely on model APIs, database connections, or RAG document stores.

Create Credential Button Located in the top right corner. Opens the credential creation window. You can configure API keys, OAuth details, or other authentication parameters. This is one of the most important controls for expanding your automation ecosystem safely.

Executions View

The Executions view is essential for understanding how workflows behave over time. It provides a complete audit trail of every run, showing input conditions, outcomes, and performance. This is where you inspect workflow failures, validate success rates, and confirm that agent behaviour is consistent.

Filter Button Lets you filter execution logs by workflow name, status, time range, or other criteria. This is especially useful in environments where many workflows run frequently.

Execution Table Displays key details for every execution:

Workflow name

Status (such as Success or Error)

Start time

Run time

Execution ID

This table provides operational visibility. It helps identify issues such as timeouts, unexpected logic paths, slow-running nodes, or errors produced by AI agents.

Auto Refresh Toggle When enabled, this automatically updates the execution list without refreshing the page. This is useful when monitoring live agent workflows or scheduled tasks.

Create Workflow Button

Allows you to start a new automation directly from this view. Helps streamline operations when you notice inefficiencies in execution data.

Data Tables View

Data tables allow you to store, retrieve, and share data across workflows. They are particularly important for RAG pipelines, agent memory, historical tracking, and storing evaluation metrics. This tab is often used by users building advanced automations that need to keep state or store structured information between workflow runs.

Empty State Panel

In your screenshot, no data tables are present yet. The panel explains the purpose of data tables:

Persist execution results

Share data across workflows

Store metrics for later evaluation This is crucial for agent systems that need to remember earlier outputs, store retrieval results, or maintain long-term context.

Create Data Table Button

Opens a wizard for creating a new table. You specify the schema, columns, and data types. Data tables become especially useful when:

Building RAG indexing pipelines

Storing embeddings

Recording audit logs

Capturing evaluation metrics for LLM behaviour

Saving intermediate results for multi-step agent workflows

Why These Views Matter

Together, Credentials, Executions, and Data Tables form the operational backbone of intelligent automation.

Credentials provide safe, controlled access to external systems and model providers.

Executions offer complete visibility into automation performance and agent behaviour.

Data Tables enable persistence, memory, and context for advanced workflows and RAG pipelines.

These areas ensure that workflows operate reliably, securely, and transparently, which is essential in environments involving audit, compliance, and any form of agentic automation.

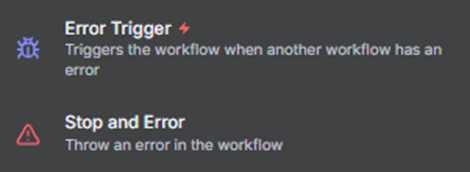

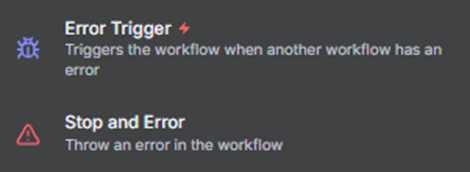

3. Nodes

Nodes are the fundamental building blocks of every workflow in n8n. They define how information moves, how decisions are made, and how actions are executed. If the workflow canvas is the environment in which ideas take shape, then nodes are the individual components that turn those ideas into structured logic. Much like KNIME’s node-centric approach, n8n uses nodes to represent each step in a process, creating a transparent and traceable pathway from input to outcome.

Nodes allow you to design workflows in a visual way, making even complex automations easier to follow. Each node performs a distinct task. Some nodes retrieve information, others evaluate conditions, some transform or enrich data, and others connect to models, databases, or document stores. Together, they form a chain that reflects the way work is carried out within your organisation.

This is especially important for agentic automation and RAG systems. In these modern workflows, nodes do far more than simply move data from one point to another. They support retrieval, prompt construction, safety controls, vector searches, and model reasoning. They allow teams to shape intelligent behaviour without requiring extensive code. Nodes become the tools through which agents interpret information, select next actions, and apply organisational knowledge.

Understanding How Nodes Function

Each node in n8n has an internal role, which is defined by its inputs, operations, and outputs.

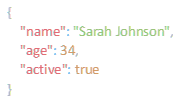

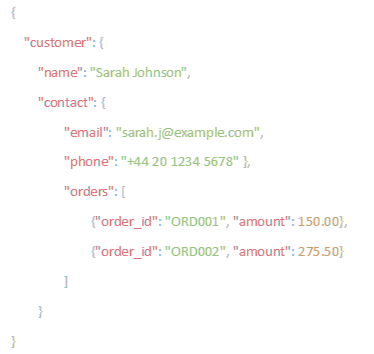

Inputs Nodes typically receive data from the previous step in the workflow. This could be a set of records, a document, an API response, or a piece of text.

Operations The node applies logic or executes an action based on its configuration. For example, it may filter results, call an API, create an embedding, classify a passage, or choose between alternative branches.

Outputs The node provides structured output that passes forward into the next step. This output may include text, arrays, metadata, embeddings, extracted values, or processed files.

This input–operation–output pattern is what gives n8n its clarity. Every transformation is visible, every action is explicit, and every decision can be traced. For audit, compliance, and assurance functions, this level of traceability is particularly valuable because it allows teams to understand exactly how evidence was evaluated or how an agent reached a conclusion.

Why Nodes Matter for RAG and Agentic Workflows

While traditional workflows rely on predictable, sequential logic, agentic workflows require systems that can:

Retrieve information from multiple sources

Analyse and compare content

Generate context aware decisions

Apply organisational policies

Execute multi-step reasoning

Integrate securely with external models

Nodes make each of these steps explicit and governable.

For example:

Retrieval nodes allow the agent to gather relevant text.

Transformation nodes clean and prepare the content.

Embedding nodes convert text into vector form for search.

Vector search nodes find the closest matching context.

LLM nodes generate structured reasoning or decisions.

Guardrail nodes check for quality, policy alignment, or exceptions.

By structuring these steps visually, n8n makes RAG pipelines understandable even for non-technical users. It transforms what would otherwise be hidden AI behaviour into clearly defined, auditable components.

Node Categories in n8n

Nodes in n8n can be grouped into several broad categories:

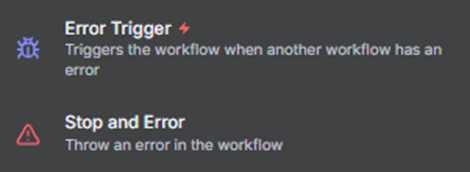

Trigger Nodes

Initiate the workflow. Examples include Schedule, Webhook, or Manual Trigger.

These are essential for timed checks, event-driven actions, or human-in-the-loop processes.

Data Acquisition Nodes

Retrieve information from systems such as HTTP endpoints, databases, SaaS platforms, or storage services.

These nodes power the retrieval side of RAG workflows.

Data Transformation Nodes

Modify, enrich, or restructure data.

Set, Function, and Item Lists are common examples.

These are often used to prepare prompts, combine retrieved content, or extract important fields.

Control Flow Nodes

Determine what happens next.

IF, Switch, Merge, and Split in Batches are used to shape logic paths or protect workflows with guardrails.

AI Nodes

Interface with language models, embedding services, vector stores, or classification engines.

These are the core of modern agentic automation.

File and Document Nodes

Handle PDFs, text files, spreadsheets, or binary content.

These nodes support document ingestion pipelines and RAG indexing.

Output Nodes

Send results to email, messaging platforms, databases, dashboards, or monitoring tools.

Each category serves a specific purpose, and many workflows require a combination of all seven.

Commonly used n8n nodes

To help you become familiar with the types of nodes most often used in RAG and agentic workflows, the following table summarises the nodes you will encounter most frequently and explains why they matter.

Node | Description | Why It Is Important for RAG and Agentic Systems |

HTTP Request | Sends HTTP calls to external APIs and receives structured responses. | Essential for connecting to model providers, vector databases, document stores, or internal APIs. Forms the backbone of most retrieval steps and agent tool actions. |

Webhook | Receives data from external systems via an incoming HTTP request. | Allows agents to respond to events, trigger RAG pipelines on demand, or process inbound documents or messages. |

Edit fields/Set Node | Creates or modifies fields within an item. | Used to prepare prompts, clean retrieved text, format embeddings, or construct structured output for LLMs. |

Function / Code Node | Executes custom JavaScript (or Python) for logic or data manipulation. | Enables advanced pre-processing, chunking documents for embedding, merging retrieved context, or instructing agents with dynamic logic. |

IF Node | Applies conditional logic based on data. | Critical for agent behaviour control. Can enforce guardrails, validate retrieved content, detect empty results, or branch based on confidence scores. |

Switch Node | Routes execution based on specific values. | Helps agents choose between multiple tools or retrieval paths, such as policy extraction vs. history lookup. |

Merge Node | Combines data streams from two or more branches. | Used to unify retrieved context from multiple sources, such as combining RAG results with metadata or internal policy references. |

Split In Batches | Processes large datasets in controlled chunks. | Important when embedding or parsing large document sets. Prevents token exhaustion and API rate issues. |

Wait Node | Pauses workflow execution for a defined period. | Useful when coordinating asynchronous RAG jobs, spaced-out retrieval, or multi-step agent loops. |

Schedule Trigger | Triggers the workflow at a scheduled interval. | Enables continuous monitoring agents, daily RAG refresh jobs, or periodic policy indexing. |

Manual Trigger | Allows users to run workflows manually. | Ideal during development of RAG flows and debugging AI behaviour. |

OpenAI / LLM Nodes | Interfaces with OpenAI, Anthropic, Gemini, or other model providers. | Core to generating reasoning, summarization, classification, or multi-step agent thinking. Often the final step consuming retrieved context. |

Email Node | Sends emails through SMTP with optional attachments and dynamic message content. | Allows agents to communicate findings, send evidence summaries, deliver exception reports, and share RAG-derived insights automatically. |

Using Nodes Together to Build Intelligent Systems

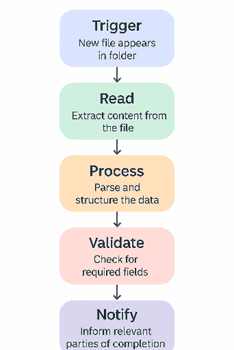

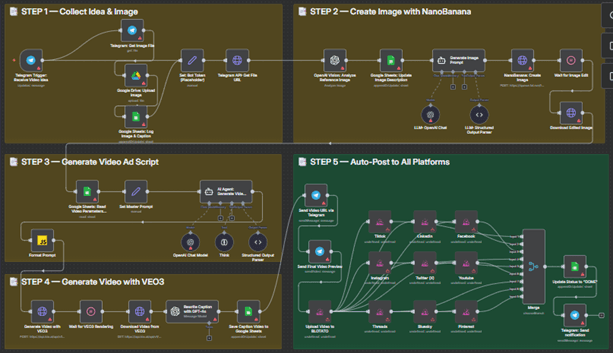

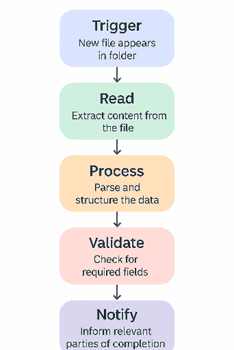

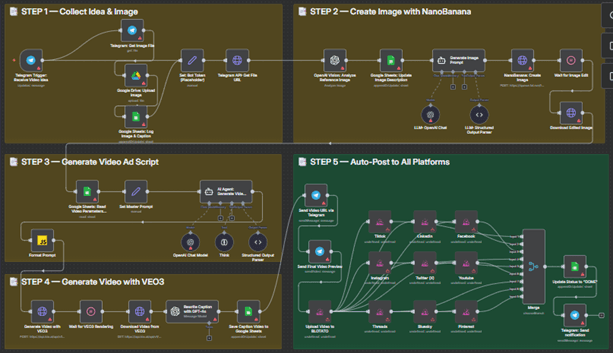

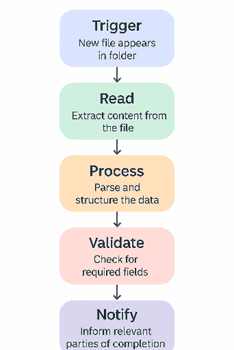

Nodes rarely operate in isolation. The strength of n8n lies in how nodes connect and pass information between one another. For example, a typical agentic or RAG workflow may involve:

A trigger node to start the workflow

An HTTP Request node to retrieve a document

A Function node to clean or split the text

An Embedding node to convert text into a vector

A Vector Search node to retrieve context

An LLM node to interpret the information

IF or Switch nodes to make controlled decisions

A storage or notification node to log results or alert stakeholders

When a red exclamation mark appears beneath a node, it signals that the node is not fully configured. You will need to complete the required fields before executing the workflow, otherwise the step will fail.

This chain-based approach allows teams to define logical, transparent, and traceable agent behaviour without needing to write a custom application.

Understanding Node Inputs and Outputs

Nodes in n8n operate by receiving data, acting on it, and passing something forward. This simple pattern is what makes workflows transparent and easy to reason about.

Inputs

Inputs are the data a node receives from the step before it. This might be text, a list of items, retrieved policy content, API responses, or extracted document sections. In RAG and agent workflows, inputs often come from retrieval steps or earlier reasoning nodes. A clear input ensures the node can run correctly and produce meaningful results.

Outputs

Outputs are the results a node produces after performing its action. These results feed directly into the next step of the workflow. Depending on the node, outputs may include transformed text, filtered items, database records, retrieved context, embeddings, or LLM-generated reasoning.

How Nodes Differ

Not every node handles data in the same way.

Transformation nodes (such as Set or Function) reshape content.

Acquisition nodes (such as HTTP Request) bring in new information.

Control nodes (such as IF or Switch) route data along different paths.

AI nodes generate reasoning or embeddings that feed the RAG process.

Document nodes extract or process file content.

Each node in n8n behaves slightly differently. The number of inputs, the format they accept, and the descriptions shown in the interface will vary depending on the node’s purpose. Exploration is an important part of learning n8n, and we encourage you to try different nodes to become familiar with their structure and functions.

Understanding how each type moves data forward helps you build workflows that are reliable, interpretable, and ready for more advanced agentic behaviour.

Building Confidence with creating workflows with Nodes

As you begin working with nodes, you will notice that even the most advanced workflows are built from simple, understandable steps. Each node performs a clear action, and together they create logic that is both transparent and reproducible. This structure is what allows n8n to support intelligent automation with confidence. RAG pipelines become readable. Agent reasoning becomes explicit. Decision paths become traceable. Instead of hiding logic inside code or complex scripts, n8n shows precisely how information is retrieved, transformed, evaluated, and acted upon.

For many teams, this transparency becomes the foundation for building more ambitious workflows. Once you understand how nodes behave and how they pass information from one step to the next, you can begin to design systems that reason with organisational knowledge, perform complex comparisons, or automate entire evidence-gathering processes. The learning curve becomes less about technical skill and more about thinking clearly and structuring your ideas.

3. Nodes

Nodes are the fundamental building blocks of every workflow in n8n. They define how information moves, how decisions are made, and how actions are executed. If the workflow canvas is the environment in which ideas take shape, then nodes are the individual components that turn those ideas into structured logic. Much like KNIME’s node-centric approach, n8n uses nodes to represent each step in a process, creating a transparent and traceable pathway from input to outcome.

Nodes allow you to design workflows in a visual way, making even complex automations easier to follow. Each node performs a distinct task. Some nodes retrieve information, others evaluate conditions, some transform or enrich data, and others connect to models, databases, or document stores. Together, they form a chain that reflects the way work is carried out within your organisation.

This is especially important for agentic automation and RAG systems. In these modern workflows, nodes do far more than simply move data from one point to another. They support retrieval, prompt construction, safety controls, vector searches, and model reasoning. They allow teams to shape intelligent behaviour without requiring extensive code. Nodes become the tools through which agents interpret information, select next actions, and apply organisational knowledge.

Understanding How Nodes Function

Each node in n8n has an internal role, which is defined by its inputs, operations, and outputs.

Inputs Nodes typically receive data from the previous step in the workflow. This could be a set of records, a document, an API response, or a piece of text.

Operations The node applies logic or executes an action based on its configuration. For example, it may filter results, call an API, create an embedding, classify a passage, or choose between alternative branches.