KNIME

KNIME

KNIME

Getting Started: KNIME

Our KNIME Primer for Finance and Audit Teams

1. Introducing the KNIME Analytics Platform

Who this guide is for

Finance and audit teams who are:

– Still using large, fragile spreadsheets for recurring tasks

– New to KNIME and want a simple, practical starting pointWhat you’ll be able to do after this guide

– Install KNIME and understand the main screen

– Build a first workflow that replaces a spreadsheet task

– Know where to look for logs, errors, and performance issues

If you’re brand new to KNIME: Do sections 1–4, then jump to 7 to build your first workflow.

If KNIME is already installed: Skim 1, then go to 3, 4 and 7.

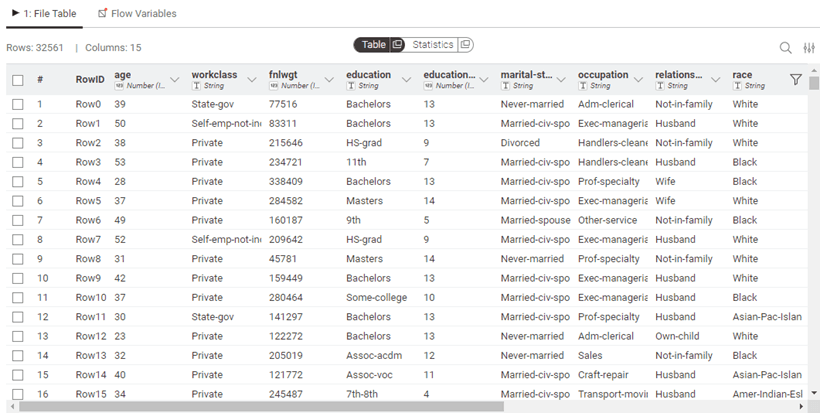

The Transition from Spreadsheets to Workflow Automation

If you're still doing monthly reporting in Excel, you already know the problems: formulas break when someone adds a row, version control is a nightmare, and good luck explaining to an auditor exactly how you got from A to B.

KNIME fixes this. It's a free, visual workflow tool that turns your manual spreadsheet processes into documented, repeatable automations. Every step is visible. Every calculation is traceable. And when next month arrives, you press one button instead of rebuilding from scratch.

We've trained hundreds of finance and audit professionals on KNIME. This guide covers what you need to get started.

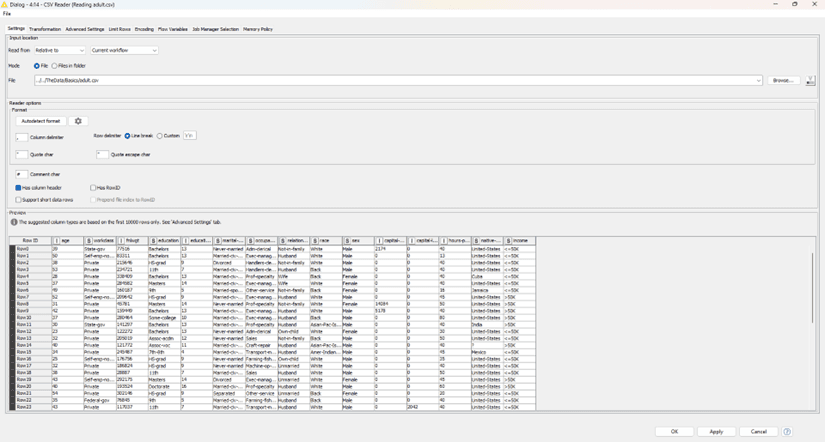

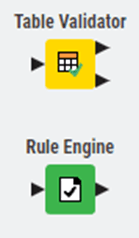

Figure 1

What is the KNIME Analytics Platform?

The KNIME Analytics Platform is an open-source, visual environment for building data workflows. Instead of writing long scripts or typing endless little commands, you connect nodes, which are small functional blocks that let you prepare data, analyse it, model it, and even deploy it.

In simpler terms: think of KNIME as a canvas where you drag, drop, and connect steps that transform your data from raw to meaningful. It brings together what many people normally do in spreadsheets, SQL, Python, and BI tools, and puts it all in one place in a visual and easy-to-follow workspace.

What it can do:

Read data from almost any source (files, databases, APIs, cloud services).

Clean, reshape, and validate data with no-code or low-code logic.

Build machine learning models and evaluate them.

Automate repetitive data tasks.

Produce reports, dashboards, and services others can reuse.

Why does KNIME matter?

Data work can be messy, scattered, and slow. It often ends up locked inside spreadsheets or scripts that only one person truly understands.KNIME exists to make analytics transparent, repeatable, and shareable for anyone who works with data.

From our training sessions: The audit trail benefit is undersold. When we train internal audit teams, the first "aha moment" is usually when they realise they can show exactly how a number was calculated. Excel hides that logic across cell formulas in multiple tabs. KNIME makes it visible in one workflow.

Key reasons people choose KNIME:

No-code or low-code accessibility: You do not need to be a developer to build powerful workflows. KNIME lets you create solutions visually, step by step.

End-to-end coverage: From data preparation and blending to modelling, reporting, and deployment, everything happens within a single workflow that you can see and understand.

Open and extendable: KNIME connects seamlessly with Python, R, SQL, cloud platforms, and modern machine learning libraries. It works with the tools you already use.

Reusability: Once a workflow is built, it can be run again with new data and the same logic. There is no need to recreate work.

Auditability and trust: Every action is visible, documented, and traceable. This creates confidence in both the process and the results.

Why organisations love it: KNIME reduces manual tasks, lowers the risk of errors, and helps teams share knowledge rather than keeping it hidden inside siloed tools. It brings clarity and structure to data work, which leads to better decisions and more reliable outcomes.

When should you use the KNIME Analytics Platform?

You can think of KNIME as your go-to tool whenever you're fed up wrestling with the same spreadsheet that you are using for semi-automated data processing (like reconciliations) and want to get rid of the errors from all those manual cut and paste and interventions.

Great use cases

When your data comes from several sources and needs to be combined in a reliable way, KNIME becomes a helpful choice. It is also useful when spreadsheets start to grow too large, too slow, or too fragile to manage. If you find yourself repeating the same tasks, such as monthly reporting or routine data preparation, KNIME helps automate these steps. It also provides a clear and transparent flow of logic that you can easily understand or share with others. When you want to explore machine learning without diving into a full coding environment, KNIME offers a practical starting point. And when you need to share experiments, prototypes, or proofs of concept quickly, KNIME makes that process simple and straightforward.

Typical workflow moments

Workflow Stage | How KNIME Helps |

|---|---|

Start of a project | Explore messy or unfamiliar data, test ideas, and build understanding before committing to deeper analysis. |

Daily or weekly cycles | Clean, validate, and distribute information consistently, reducing repeated manual work. |

Advanced analytics | Build predictive models, apply algorithms, evaluate results, and keep everything documented in a clear workflow. |

Operationalisation | Move analytics into production, integrate with enterprise systems, and automate processes for reliable long-term use. |

Where can KNIME be used?

KNIME fits naturally wherever data needs to be understood, cleaned, combined, or transformed. It is not tied to a single industry or a specific type of system. Instead, it adapts to the environment you already work in. Many people begin by installing KNIME on a laptop and using it as their personal analysis space. From there, it scales comfortably into larger ecosystems such as on-premise databases, cloud data warehouses, or secure enterprise platforms. The experience remains consistent, since you build workflows visually regardless of where the data resides.

Across industries, the tasks may vary, but the underlying challenges are often the same. A financial analyst might use KNIME to reconcile ledgers across multiple systems, while a marketer uses it to bring together campaign metrics from several platforms. Manufacturing teams blend sensor readings with quality data. Healthcare teams process clinical records. Retailers explore sales patterns. The subject matter may change, but the need for a clear and repeatable way to work with information stays constant.

KNIME is equally flexible in technical environments. It can read from a simple local file on your machine, but it also connects to cloud storage, enterprise applications, and APIs when the situation calls for it. And when teams need automation or collaboration, workflows can move easily from a local computer to a KNIME Business Hub, where they can run on a schedule, power dashboards, or act as shared tools for the wider organisation. In other words, KNIME works wherever your data lives, whether that is a single spreadsheet or a fully equipped enterprise infrastructure.

Who can use KNIME?

KNIME is designed for anyone who works with data, regardless of skill level. Beginners appreciate its visual, step by step approach, which makes analysis easy to learn and encourages confidence without needing to write code. Tasks that feel familiar in spreadsheets become clear visual actions in a workflow.

Technical users benefit just as much. Data scientists can use Python or R for advanced modeling, while engineers and developers connect KNIME to databases, cloud systems, and APIs. The logic behind every step remains visible and traceable, which keeps solutions maintainable and easy to review.

Teams gain the most from this combination. Because workflows are transparent and reproducible, one person’s work can be understood and trusted by others. This makes KNIME ideal for environments where clarity and accountability matter, such as auditing, regulatory work, and quality assurance.

In short, KNIME is for anyone who needs reliable, transparent, and repeatable data work, from beginners building their first workflows to experts delivering enterprise level solutions.

If you want to explore what else is possible, we recommend visiting the official KNIME website. It is a great way to see the platform’s full capabilities and discover additional resources.

Want help rolling this out to your team?

We run KNIME training specifically for finance and audit teams using your processes, not toy examples. Get in touch to talk about a short pilot or a training cohort.

1. Introducing the KNIME Analytics Platform

Who this guide is for

Finance and audit teams who are:

– Still using large, fragile spreadsheets for recurring tasks

– New to KNIME and want a simple, practical starting pointWhat you’ll be able to do after this guide

– Install KNIME and understand the main screen

– Build a first workflow that replaces a spreadsheet task

– Know where to look for logs, errors, and performance issues

If you’re brand new to KNIME: Do sections 1–4, then jump to 7 to build your first workflow.

If KNIME is already installed: Skim 1, then go to 3, 4 and 7.

The Transition from Spreadsheets to Workflow Automation

If you're still doing monthly reporting in Excel, you already know the problems: formulas break when someone adds a row, version control is a nightmare, and good luck explaining to an auditor exactly how you got from A to B.

KNIME fixes this. It's a free, visual workflow tool that turns your manual spreadsheet processes into documented, repeatable automations. Every step is visible. Every calculation is traceable. And when next month arrives, you press one button instead of rebuilding from scratch.

We've trained hundreds of finance and audit professionals on KNIME. This guide covers what you need to get started.

Figure 1

What is the KNIME Analytics Platform?

The KNIME Analytics Platform is an open-source, visual environment for building data workflows. Instead of writing long scripts or typing endless little commands, you connect nodes, which are small functional blocks that let you prepare data, analyse it, model it, and even deploy it.

In simpler terms: think of KNIME as a canvas where you drag, drop, and connect steps that transform your data from raw to meaningful. It brings together what many people normally do in spreadsheets, SQL, Python, and BI tools, and puts it all in one place in a visual and easy-to-follow workspace.

What it can do:

Read data from almost any source (files, databases, APIs, cloud services).

Clean, reshape, and validate data with no-code or low-code logic.

Build machine learning models and evaluate them.

Automate repetitive data tasks.

Produce reports, dashboards, and services others can reuse.

Why does KNIME matter?

Data work can be messy, scattered, and slow. It often ends up locked inside spreadsheets or scripts that only one person truly understands.KNIME exists to make analytics transparent, repeatable, and shareable for anyone who works with data.

From our training sessions: The audit trail benefit is undersold. When we train internal audit teams, the first "aha moment" is usually when they realise they can show exactly how a number was calculated. Excel hides that logic across cell formulas in multiple tabs. KNIME makes it visible in one workflow.

Key reasons people choose KNIME:

No-code or low-code accessibility: You do not need to be a developer to build powerful workflows. KNIME lets you create solutions visually, step by step.

End-to-end coverage: From data preparation and blending to modelling, reporting, and deployment, everything happens within a single workflow that you can see and understand.

Open and extendable: KNIME connects seamlessly with Python, R, SQL, cloud platforms, and modern machine learning libraries. It works with the tools you already use.

Reusability: Once a workflow is built, it can be run again with new data and the same logic. There is no need to recreate work.

Auditability and trust: Every action is visible, documented, and traceable. This creates confidence in both the process and the results.

Why organisations love it: KNIME reduces manual tasks, lowers the risk of errors, and helps teams share knowledge rather than keeping it hidden inside siloed tools. It brings clarity and structure to data work, which leads to better decisions and more reliable outcomes.

When should you use the KNIME Analytics Platform?

You can think of KNIME as your go-to tool whenever you're fed up wrestling with the same spreadsheet that you are using for semi-automated data processing (like reconciliations) and want to get rid of the errors from all those manual cut and paste and interventions.

Great use cases

When your data comes from several sources and needs to be combined in a reliable way, KNIME becomes a helpful choice. It is also useful when spreadsheets start to grow too large, too slow, or too fragile to manage. If you find yourself repeating the same tasks, such as monthly reporting or routine data preparation, KNIME helps automate these steps. It also provides a clear and transparent flow of logic that you can easily understand or share with others. When you want to explore machine learning without diving into a full coding environment, KNIME offers a practical starting point. And when you need to share experiments, prototypes, or proofs of concept quickly, KNIME makes that process simple and straightforward.

Typical workflow moments

Workflow Stage | How KNIME Helps |

|---|---|

Start of a project | Explore messy or unfamiliar data, test ideas, and build understanding before committing to deeper analysis. |

Daily or weekly cycles | Clean, validate, and distribute information consistently, reducing repeated manual work. |

Advanced analytics | Build predictive models, apply algorithms, evaluate results, and keep everything documented in a clear workflow. |

Operationalisation | Move analytics into production, integrate with enterprise systems, and automate processes for reliable long-term use. |

Where can KNIME be used?

KNIME fits naturally wherever data needs to be understood, cleaned, combined, or transformed. It is not tied to a single industry or a specific type of system. Instead, it adapts to the environment you already work in. Many people begin by installing KNIME on a laptop and using it as their personal analysis space. From there, it scales comfortably into larger ecosystems such as on-premise databases, cloud data warehouses, or secure enterprise platforms. The experience remains consistent, since you build workflows visually regardless of where the data resides.

Across industries, the tasks may vary, but the underlying challenges are often the same. A financial analyst might use KNIME to reconcile ledgers across multiple systems, while a marketer uses it to bring together campaign metrics from several platforms. Manufacturing teams blend sensor readings with quality data. Healthcare teams process clinical records. Retailers explore sales patterns. The subject matter may change, but the need for a clear and repeatable way to work with information stays constant.

KNIME is equally flexible in technical environments. It can read from a simple local file on your machine, but it also connects to cloud storage, enterprise applications, and APIs when the situation calls for it. And when teams need automation or collaboration, workflows can move easily from a local computer to a KNIME Business Hub, where they can run on a schedule, power dashboards, or act as shared tools for the wider organisation. In other words, KNIME works wherever your data lives, whether that is a single spreadsheet or a fully equipped enterprise infrastructure.

Who can use KNIME?

KNIME is designed for anyone who works with data, regardless of skill level. Beginners appreciate its visual, step by step approach, which makes analysis easy to learn and encourages confidence without needing to write code. Tasks that feel familiar in spreadsheets become clear visual actions in a workflow.

Technical users benefit just as much. Data scientists can use Python or R for advanced modeling, while engineers and developers connect KNIME to databases, cloud systems, and APIs. The logic behind every step remains visible and traceable, which keeps solutions maintainable and easy to review.

Teams gain the most from this combination. Because workflows are transparent and reproducible, one person’s work can be understood and trusted by others. This makes KNIME ideal for environments where clarity and accountability matter, such as auditing, regulatory work, and quality assurance.

In short, KNIME is for anyone who needs reliable, transparent, and repeatable data work, from beginners building their first workflows to experts delivering enterprise level solutions.

If you want to explore what else is possible, we recommend visiting the official KNIME website. It is a great way to see the platform’s full capabilities and discover additional resources.

Want help rolling this out to your team?

We run KNIME training specifically for finance and audit teams using your processes, not toy examples. Get in touch to talk about a short pilot or a training cohort.

1. Introducing the KNIME Analytics Platform

Who this guide is for

Finance and audit teams who are:

– Still using large, fragile spreadsheets for recurring tasks

– New to KNIME and want a simple, practical starting pointWhat you’ll be able to do after this guide

– Install KNIME and understand the main screen

– Build a first workflow that replaces a spreadsheet task

– Know where to look for logs, errors, and performance issues

If you’re brand new to KNIME: Do sections 1–4, then jump to 7 to build your first workflow.

If KNIME is already installed: Skim 1, then go to 3, 4 and 7.

The Transition from Spreadsheets to Workflow Automation

If you're still doing monthly reporting in Excel, you already know the problems: formulas break when someone adds a row, version control is a nightmare, and good luck explaining to an auditor exactly how you got from A to B.

KNIME fixes this. It's a free, visual workflow tool that turns your manual spreadsheet processes into documented, repeatable automations. Every step is visible. Every calculation is traceable. And when next month arrives, you press one button instead of rebuilding from scratch.

We've trained hundreds of finance and audit professionals on KNIME. This guide covers what you need to get started.

Figure 1

What is the KNIME Analytics Platform?

The KNIME Analytics Platform is an open-source, visual environment for building data workflows. Instead of writing long scripts or typing endless little commands, you connect nodes, which are small functional blocks that let you prepare data, analyse it, model it, and even deploy it.

In simpler terms: think of KNIME as a canvas where you drag, drop, and connect steps that transform your data from raw to meaningful. It brings together what many people normally do in spreadsheets, SQL, Python, and BI tools, and puts it all in one place in a visual and easy-to-follow workspace.

What it can do:

Read data from almost any source (files, databases, APIs, cloud services).

Clean, reshape, and validate data with no-code or low-code logic.

Build machine learning models and evaluate them.

Automate repetitive data tasks.

Produce reports, dashboards, and services others can reuse.

Why does KNIME matter?

Data work can be messy, scattered, and slow. It often ends up locked inside spreadsheets or scripts that only one person truly understands.KNIME exists to make analytics transparent, repeatable, and shareable for anyone who works with data.

From our training sessions: The audit trail benefit is undersold. When we train internal audit teams, the first "aha moment" is usually when they realise they can show exactly how a number was calculated. Excel hides that logic across cell formulas in multiple tabs. KNIME makes it visible in one workflow.

Key reasons people choose KNIME:

No-code or low-code accessibility: You do not need to be a developer to build powerful workflows. KNIME lets you create solutions visually, step by step.

End-to-end coverage: From data preparation and blending to modelling, reporting, and deployment, everything happens within a single workflow that you can see and understand.

Open and extendable: KNIME connects seamlessly with Python, R, SQL, cloud platforms, and modern machine learning libraries. It works with the tools you already use.

Reusability: Once a workflow is built, it can be run again with new data and the same logic. There is no need to recreate work.

Auditability and trust: Every action is visible, documented, and traceable. This creates confidence in both the process and the results.

Why organisations love it: KNIME reduces manual tasks, lowers the risk of errors, and helps teams share knowledge rather than keeping it hidden inside siloed tools. It brings clarity and structure to data work, which leads to better decisions and more reliable outcomes.

When should you use the KNIME Analytics Platform?

You can think of KNIME as your go-to tool whenever you're fed up wrestling with the same spreadsheet that you are using for semi-automated data processing (like reconciliations) and want to get rid of the errors from all those manual cut and paste and interventions.

Great use cases

When your data comes from several sources and needs to be combined in a reliable way, KNIME becomes a helpful choice. It is also useful when spreadsheets start to grow too large, too slow, or too fragile to manage. If you find yourself repeating the same tasks, such as monthly reporting or routine data preparation, KNIME helps automate these steps. It also provides a clear and transparent flow of logic that you can easily understand or share with others. When you want to explore machine learning without diving into a full coding environment, KNIME offers a practical starting point. And when you need to share experiments, prototypes, or proofs of concept quickly, KNIME makes that process simple and straightforward.

Typical workflow moments

Workflow Stage | How KNIME Helps |

|---|---|

Start of a project | Explore messy or unfamiliar data, test ideas, and build understanding before committing to deeper analysis. |

Daily or weekly cycles | Clean, validate, and distribute information consistently, reducing repeated manual work. |

Advanced analytics | Build predictive models, apply algorithms, evaluate results, and keep everything documented in a clear workflow. |

Operationalisation | Move analytics into production, integrate with enterprise systems, and automate processes for reliable long-term use. |

Where can KNIME be used?

KNIME fits naturally wherever data needs to be understood, cleaned, combined, or transformed. It is not tied to a single industry or a specific type of system. Instead, it adapts to the environment you already work in. Many people begin by installing KNIME on a laptop and using it as their personal analysis space. From there, it scales comfortably into larger ecosystems such as on-premise databases, cloud data warehouses, or secure enterprise platforms. The experience remains consistent, since you build workflows visually regardless of where the data resides.

Across industries, the tasks may vary, but the underlying challenges are often the same. A financial analyst might use KNIME to reconcile ledgers across multiple systems, while a marketer uses it to bring together campaign metrics from several platforms. Manufacturing teams blend sensor readings with quality data. Healthcare teams process clinical records. Retailers explore sales patterns. The subject matter may change, but the need for a clear and repeatable way to work with information stays constant.

KNIME is equally flexible in technical environments. It can read from a simple local file on your machine, but it also connects to cloud storage, enterprise applications, and APIs when the situation calls for it. And when teams need automation or collaboration, workflows can move easily from a local computer to a KNIME Business Hub, where they can run on a schedule, power dashboards, or act as shared tools for the wider organisation. In other words, KNIME works wherever your data lives, whether that is a single spreadsheet or a fully equipped enterprise infrastructure.

Who can use KNIME?

KNIME is designed for anyone who works with data, regardless of skill level. Beginners appreciate its visual, step by step approach, which makes analysis easy to learn and encourages confidence without needing to write code. Tasks that feel familiar in spreadsheets become clear visual actions in a workflow.

Technical users benefit just as much. Data scientists can use Python or R for advanced modeling, while engineers and developers connect KNIME to databases, cloud systems, and APIs. The logic behind every step remains visible and traceable, which keeps solutions maintainable and easy to review.

Teams gain the most from this combination. Because workflows are transparent and reproducible, one person’s work can be understood and trusted by others. This makes KNIME ideal for environments where clarity and accountability matter, such as auditing, regulatory work, and quality assurance.

In short, KNIME is for anyone who needs reliable, transparent, and repeatable data work, from beginners building their first workflows to experts delivering enterprise level solutions.

If you want to explore what else is possible, we recommend visiting the official KNIME website. It is a great way to see the platform’s full capabilities and discover additional resources.

Want help rolling this out to your team?

We run KNIME training specifically for finance and audit teams using your processes, not toy examples. Get in touch to talk about a short pilot or a training cohort.

2. Installing the KNIME Analytics Platform

Before you begin building workflows or exploring data, you will need a working version of the KNIME Analytics Platform on your machine. Fortunately, the installation process is simple, and most users, whether they work in IT, analytics, audit, or business operations, can complete it without assistance. This chapter guides you through the steps in a clear and steady manner, so you know exactly what to expect from the moment you download KNIME to the moment it opens for the first time.

If you’re on a locked-down corporate laptop, you may need IT to install KNIME for you. In that case, send them this section or point them to the KNIME installation page.

Getting KNIME onto your computer is a simple process. You download it, install it, open it, and begin working. This chapter walks you through those steps in a clean, straightforward flow, without assuming any technical background. Follow the steps below:

Go to the KNIME Analytics Platform Download Page. In here, you can register to receive helpful updates and tips, or simply accept the terms and conditions and directly head on to the available installation packages.

Figure 2.1 — Download Landing Page

Choose the release line that best fits your needs. KNIME provides two options. The Standard Release is updated every 6 weeks and includes the newest features, which makes it a good choice for most users. The Long Term Support Release is updated less often and is ideal for teams that prefer maximum stability. You can find a full explanation of the differences in the release notes FAQ.

Figure 2.2 — Releases

Scroll down to the section for your operating system (Windows, Linux, macOS).

Figure 2.3 — OS Installation Packages

Click the installer type that best fits your needs to start the download:

Windows options:

Installer: Extracts files, adds a desktop icon, and suggests memory settings.

Self-extracting archive: Creates a folder with the installation files, no extra software required.

Zip archive: Can be downloaded and extracted anywhere you have full access rights.

Once the file is on your machine, run the installer.

On Windows, open the .exe file and follow the standard installation prompts.

On macOS, open the .dmg file and drag KNIME into your Applications folder.

On Linux, extract the downloaded archive and place the folder wherever you prefer.

The installation process is routine; most users finish in a couple of minutes.

Launch KNIME from your applications list. The first thing KNIME asks for is a workspace. A workspace is a folder where your workflows and settings will live. You can accept the default option or choose your own. Once selected, KNIME opens and presents a clean canvas ready for your first workflow.

Figure 2.4 — Workspace Preview upon Launch

Advanced Options (You Can Skip This on Day One!)

As your work grows, you may want to add extra capabilities such as Python integration, database tools, machine learning libraries, or cloud connectors. To install these, open the Help menu and select Install Extensions. Choose what you need and KNIME will set it up for you. You can add extensions whenever you like, so there is no need to install everything at the start.

Figure 2.5 — Install Extensions under the Menu option

If you work with large datasets, you may want to increase KNIME’s memory limit. This is done by editing a small configuration file called knime.ini located in the installation directory. Raising the value of the -Xmx setting allows KNIME to use more memory. For many users, this step isn’t necessary at the beginning, but it’s helpful to know where it lives.

With installation complete, you now have a fully capable analytics environment on your machine. You can begin creating workflows, explore sample data, or try rebuilding tasks from your daily work. KNIME is ready as soon as it opens, with no extra setup, no activation, and no hidden steps.

2. Installing the KNIME Analytics Platform

Before you begin building workflows or exploring data, you will need a working version of the KNIME Analytics Platform on your machine. Fortunately, the installation process is simple, and most users, whether they work in IT, analytics, audit, or business operations, can complete it without assistance. This chapter guides you through the steps in a clear and steady manner, so you know exactly what to expect from the moment you download KNIME to the moment it opens for the first time.

If you’re on a locked-down corporate laptop, you may need IT to install KNIME for you. In that case, send them this section or point them to the KNIME installation page.

Getting KNIME onto your computer is a simple process. You download it, install it, open it, and begin working. This chapter walks you through those steps in a clean, straightforward flow, without assuming any technical background. Follow the steps below:

Go to the KNIME Analytics Platform Download Page. In here, you can register to receive helpful updates and tips, or simply accept the terms and conditions and directly head on to the available installation packages.

Figure 2.1 — Download Landing Page

Choose the release line that best fits your needs. KNIME provides two options. The Standard Release is updated every 6 weeks and includes the newest features, which makes it a good choice for most users. The Long Term Support Release is updated less often and is ideal for teams that prefer maximum stability. You can find a full explanation of the differences in the release notes FAQ.

Figure 2.2 — Releases

Scroll down to the section for your operating system (Windows, Linux, macOS).

Figure 2.3 — OS Installation Packages

Click the installer type that best fits your needs to start the download:

Windows options:

Installer: Extracts files, adds a desktop icon, and suggests memory settings.

Self-extracting archive: Creates a folder with the installation files, no extra software required.

Zip archive: Can be downloaded and extracted anywhere you have full access rights.

Once the file is on your machine, run the installer.

On Windows, open the .exe file and follow the standard installation prompts.

On macOS, open the .dmg file and drag KNIME into your Applications folder.

On Linux, extract the downloaded archive and place the folder wherever you prefer.

The installation process is routine; most users finish in a couple of minutes.

Launch KNIME from your applications list. The first thing KNIME asks for is a workspace. A workspace is a folder where your workflows and settings will live. You can accept the default option or choose your own. Once selected, KNIME opens and presents a clean canvas ready for your first workflow.

Figure 2.4 — Workspace Preview upon Launch

Advanced Options (You Can Skip This on Day One!)

As your work grows, you may want to add extra capabilities such as Python integration, database tools, machine learning libraries, or cloud connectors. To install these, open the Help menu and select Install Extensions. Choose what you need and KNIME will set it up for you. You can add extensions whenever you like, so there is no need to install everything at the start.

Figure 2.5 — Install Extensions under the Menu option

If you work with large datasets, you may want to increase KNIME’s memory limit. This is done by editing a small configuration file called knime.ini located in the installation directory. Raising the value of the -Xmx setting allows KNIME to use more memory. For many users, this step isn’t necessary at the beginning, but it’s helpful to know where it lives.

With installation complete, you now have a fully capable analytics environment on your machine. You can begin creating workflows, explore sample data, or try rebuilding tasks from your daily work. KNIME is ready as soon as it opens, with no extra setup, no activation, and no hidden steps.

2. Installing the KNIME Analytics Platform

Before you begin building workflows or exploring data, you will need a working version of the KNIME Analytics Platform on your machine. Fortunately, the installation process is simple, and most users, whether they work in IT, analytics, audit, or business operations, can complete it without assistance. This chapter guides you through the steps in a clear and steady manner, so you know exactly what to expect from the moment you download KNIME to the moment it opens for the first time.

If you’re on a locked-down corporate laptop, you may need IT to install KNIME for you. In that case, send them this section or point them to the KNIME installation page.

Getting KNIME onto your computer is a simple process. You download it, install it, open it, and begin working. This chapter walks you through those steps in a clean, straightforward flow, without assuming any technical background. Follow the steps below:

Go to the KNIME Analytics Platform Download Page. In here, you can register to receive helpful updates and tips, or simply accept the terms and conditions and directly head on to the available installation packages.

Figure 2.1 — Download Landing Page

Choose the release line that best fits your needs. KNIME provides two options. The Standard Release is updated every 6 weeks and includes the newest features, which makes it a good choice for most users. The Long Term Support Release is updated less often and is ideal for teams that prefer maximum stability. You can find a full explanation of the differences in the release notes FAQ.

Figure 2.2 — Releases

Scroll down to the section for your operating system (Windows, Linux, macOS).

Figure 2.3 — OS Installation Packages

Click the installer type that best fits your needs to start the download:

Windows options:

Installer: Extracts files, adds a desktop icon, and suggests memory settings.

Self-extracting archive: Creates a folder with the installation files, no extra software required.

Zip archive: Can be downloaded and extracted anywhere you have full access rights.

Once the file is on your machine, run the installer.

On Windows, open the .exe file and follow the standard installation prompts.

On macOS, open the .dmg file and drag KNIME into your Applications folder.

On Linux, extract the downloaded archive and place the folder wherever you prefer.

The installation process is routine; most users finish in a couple of minutes.

Launch KNIME from your applications list. The first thing KNIME asks for is a workspace. A workspace is a folder where your workflows and settings will live. You can accept the default option or choose your own. Once selected, KNIME opens and presents a clean canvas ready for your first workflow.

Figure 2.4 — Workspace Preview upon Launch

Advanced Options (You Can Skip This on Day One!)

As your work grows, you may want to add extra capabilities such as Python integration, database tools, machine learning libraries, or cloud connectors. To install these, open the Help menu and select Install Extensions. Choose what you need and KNIME will set it up for you. You can add extensions whenever you like, so there is no need to install everything at the start.

Figure 2.5 — Install Extensions under the Menu option

If you work with large datasets, you may want to increase KNIME’s memory limit. This is done by editing a small configuration file called knime.ini located in the installation directory. Raising the value of the -Xmx setting allows KNIME to use more memory. For many users, this step isn’t necessary at the beginning, but it’s helpful to know where it lives.

With installation complete, you now have a fully capable analytics environment on your machine. You can begin creating workflows, explore sample data, or try rebuilding tasks from your daily work. KNIME is ready as soon as it opens, with no extra setup, no activation, and no hidden steps.

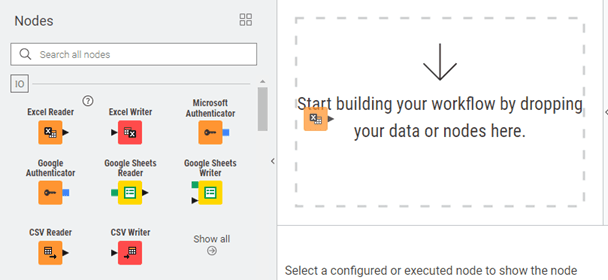

3. A Quick Tour of the KNIME Interface

Once KNIME is installed and opened, the first thing you see is the user interface, often called the UI. It is the main workspace where you build workflows, inspect data, and interact with every part of the platform. Even if you are new to KNIME, the layout is easy to learn because each area of the screen has a clear purpose.

This chapter introduces the major parts of the interface so that you know exactly what you are looking at when you begin working.

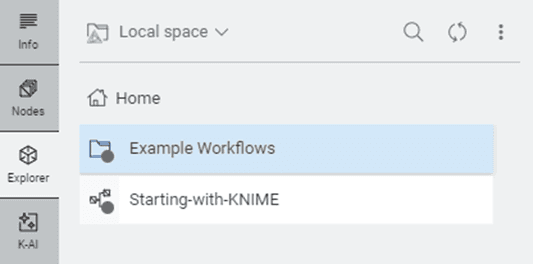

Figure 3.1 — KNIME Modern UI General Layout - application tabs, side panel, workflow editor and node monitor

Application Tabs

The row of tabs at the top of the window. Each tab represents either the entry page or an open workflow. It helps you move between multiple workflows without losing your place. Just like switching between browser tabs, you can jump from one workflow to another instantly. Click a tab to bring that workflow into focus. Close tabs when you are finished. This makes it easy to compare workflows side by side or keep reference workflows open while working on a new one.

Side Panel Navigation

The left side of the screen is your central navigation hub. It contains multiple panels that help you explore nodes, understand workflows, and manage files. It helps you understand what the workflow or component contains, what it is meant to do, and any documentation provided. It contains additional tabs for:

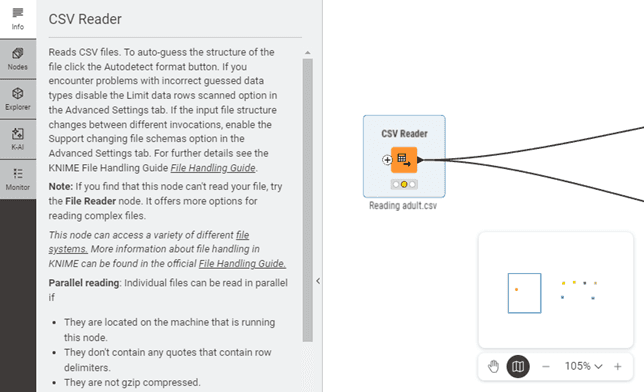

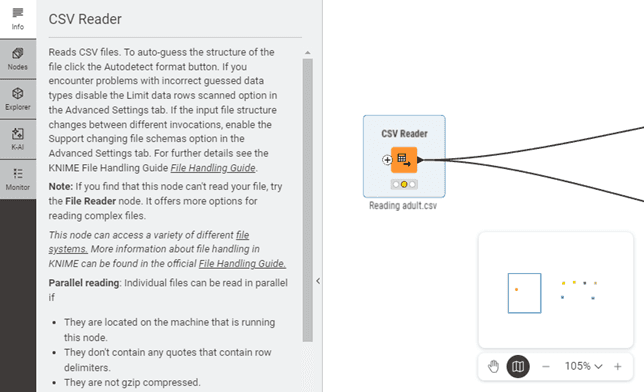

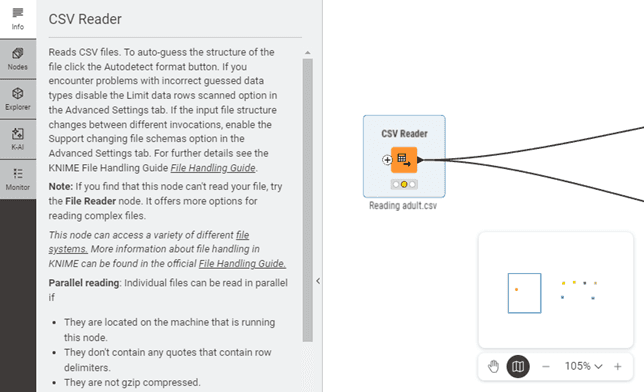

Description (Info) A panel that shows information about the currently selected workflow or component. It helps you understand what the workflow or component contains, what it is meant to do, and any documentation the creator has provided.

Node Repository (Nodes) - A list of all available nodes in the KNIME Analytics Platform. This is where you pick the building blocks for your workflow. Each node represents a specific task such as filtering data, reading a file, training a model, or writing to a database.

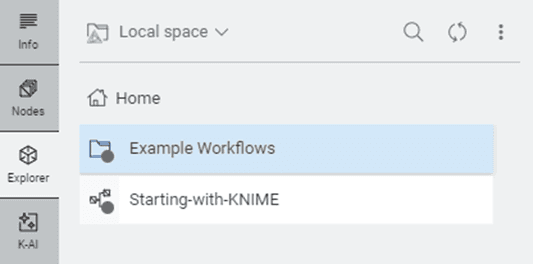

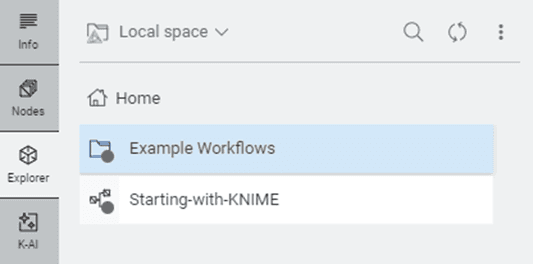

Space Explorer (Explorer) - A file browser that lets you navigate your KNIME Hub spaces or your local KNIME workspaces. It helps you open, organize, and manage workflows, components, data files, and KNIME Hub content in one place.

Workflow Monitor (Monitor) - A panel that keeps track of the health of your workflow. It helps you see which nodes have errors or warnings. This is especially useful when debugging a workflow or trying to find the step where something went wrong.

Note: K-AI is the AI assistant built into KNIME Analytics Platform. It serves two primary roles: first as a conversational assistant (Q&A mode) and second as a workflow-building partner (Build mode). You don’t need K-AI to follow this guide; we’ll build everything manually so you understand what’s happening.

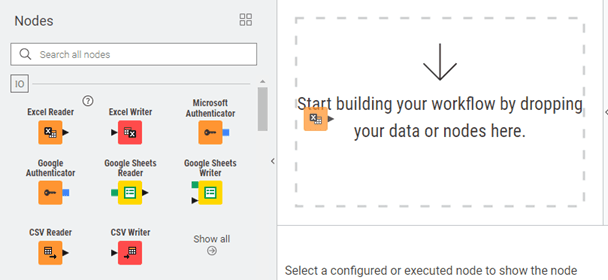

Workflow Editor

The large central canvas where your workflow is built. It displays your nodes and the connections between them. This is where you design, structure, and execute your entire analytical process.

Help, Preferences, and Menu

A set of menus located at the upper-right area of the interface. It gives you access to help resources, extension installation, application preferences, themes, update settings, and configuration options.

Node Monitor

A panel that displays the output of the currently selected node. It lets you see what your data looks like at each step. You can inspect tables, check flow variable values, and review intermediate results without opening full data views.

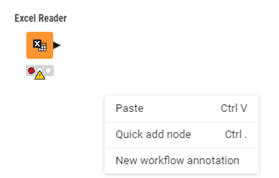

Figure 3.2 — User interface elements — workflow toolbar, node action bar, rename components and metanodes

Workflow Toolbar

A compact toolbar at the top-left area of the workflow canvas. It provides quick access to common workflow actions. This includes saving, undoing, redoing, and other commands that apply to the workflow as a whole or to the node you have selected.

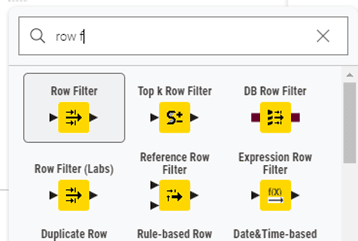

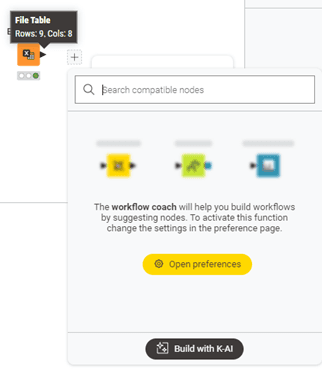

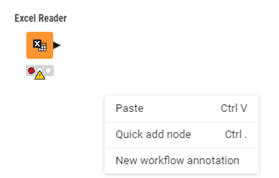

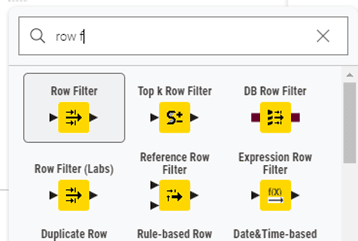

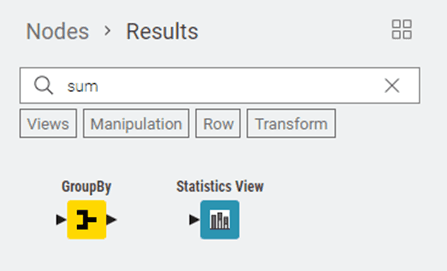

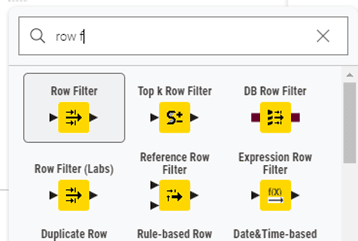

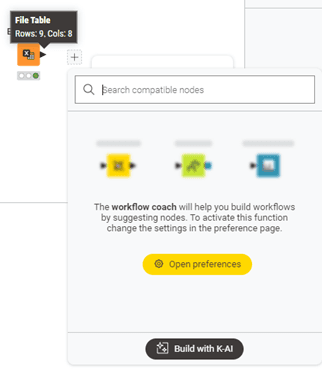

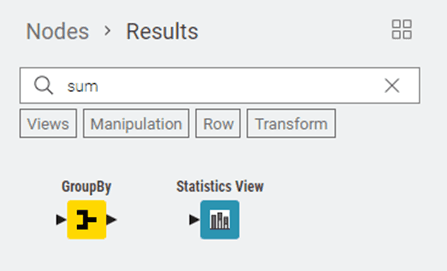

Node Repository Result

A filtered list of nodes shown inside the Side Panel after you search in the Node Repository. It displays only the nodes that match your search term or the categories you selected. This makes it much easier to find exactly what you need without scrolling through the entire repository.

Rename

A feature that allows you to rename metanodes and components directly on the workflow canvas. It helps you keep your workflow clean and understandable. Naming larger structures is important because it shows others what the node contains and what role it plays in your process.

Zoom Controls

A set of controls that allow you to zoom in, zoom out, or fit the workflow to your screen. Zooming helps you navigate both large and small workflows. It allows you to move from high-level overviews to detailed node-level adjustments without losing your place.

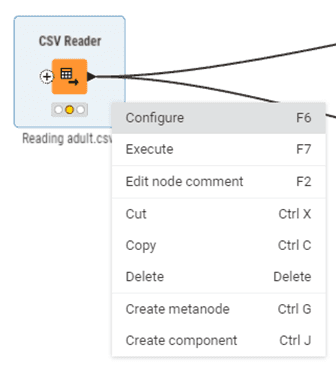

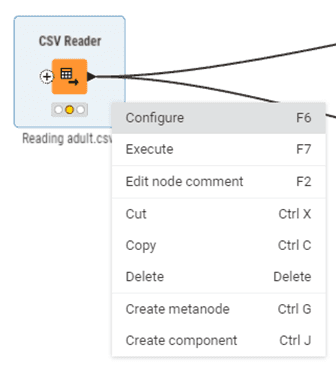

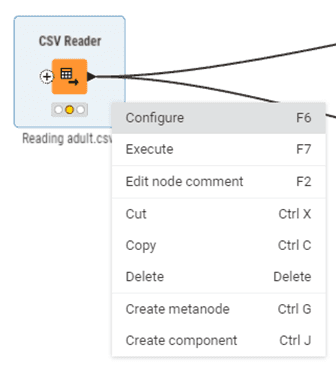

Node Action Bar

A contextual bar that appears next to a selected node. It gives you direct access to node-specific actions such as configuring the node, executing it, resetting it, or opening its view.

Try it now: Open KNIME, create a new empty workflow, click a node in the Node Repository and drag it on to the canvas. Watch how the Node Monitor updates when you select different nodes.

3. A Quick Tour of the KNIME Interface

Once KNIME is installed and opened, the first thing you see is the user interface, often called the UI. It is the main workspace where you build workflows, inspect data, and interact with every part of the platform. Even if you are new to KNIME, the layout is easy to learn because each area of the screen has a clear purpose.

This chapter introduces the major parts of the interface so that you know exactly what you are looking at when you begin working.

Figure 3.1 — KNIME Modern UI General Layout - application tabs, side panel, workflow editor and node monitor

Application Tabs

The row of tabs at the top of the window. Each tab represents either the entry page or an open workflow. It helps you move between multiple workflows without losing your place. Just like switching between browser tabs, you can jump from one workflow to another instantly. Click a tab to bring that workflow into focus. Close tabs when you are finished. This makes it easy to compare workflows side by side or keep reference workflows open while working on a new one.

Side Panel Navigation

The left side of the screen is your central navigation hub. It contains multiple panels that help you explore nodes, understand workflows, and manage files. It helps you understand what the workflow or component contains, what it is meant to do, and any documentation provided. It contains additional tabs for:

Description (Info) A panel that shows information about the currently selected workflow or component. It helps you understand what the workflow or component contains, what it is meant to do, and any documentation the creator has provided.

Node Repository (Nodes) - A list of all available nodes in the KNIME Analytics Platform. This is where you pick the building blocks for your workflow. Each node represents a specific task such as filtering data, reading a file, training a model, or writing to a database.

Space Explorer (Explorer) - A file browser that lets you navigate your KNIME Hub spaces or your local KNIME workspaces. It helps you open, organize, and manage workflows, components, data files, and KNIME Hub content in one place.

Workflow Monitor (Monitor) - A panel that keeps track of the health of your workflow. It helps you see which nodes have errors or warnings. This is especially useful when debugging a workflow or trying to find the step where something went wrong.

Note: K-AI is the AI assistant built into KNIME Analytics Platform. It serves two primary roles: first as a conversational assistant (Q&A mode) and second as a workflow-building partner (Build mode). You don’t need K-AI to follow this guide; we’ll build everything manually so you understand what’s happening.

Workflow Editor

The large central canvas where your workflow is built. It displays your nodes and the connections between them. This is where you design, structure, and execute your entire analytical process.

Help, Preferences, and Menu

A set of menus located at the upper-right area of the interface. It gives you access to help resources, extension installation, application preferences, themes, update settings, and configuration options.

Node Monitor

A panel that displays the output of the currently selected node. It lets you see what your data looks like at each step. You can inspect tables, check flow variable values, and review intermediate results without opening full data views.

Figure 3.2 — User interface elements — workflow toolbar, node action bar, rename components and metanodes

Workflow Toolbar

A compact toolbar at the top-left area of the workflow canvas. It provides quick access to common workflow actions. This includes saving, undoing, redoing, and other commands that apply to the workflow as a whole or to the node you have selected.

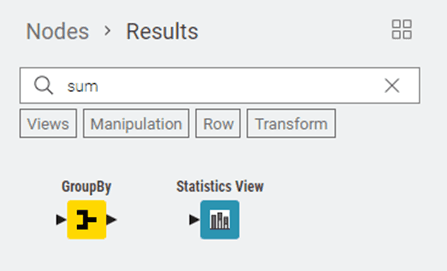

Node Repository Result

A filtered list of nodes shown inside the Side Panel after you search in the Node Repository. It displays only the nodes that match your search term or the categories you selected. This makes it much easier to find exactly what you need without scrolling through the entire repository.

Rename

A feature that allows you to rename metanodes and components directly on the workflow canvas. It helps you keep your workflow clean and understandable. Naming larger structures is important because it shows others what the node contains and what role it plays in your process.

Zoom Controls

A set of controls that allow you to zoom in, zoom out, or fit the workflow to your screen. Zooming helps you navigate both large and small workflows. It allows you to move from high-level overviews to detailed node-level adjustments without losing your place.

Node Action Bar

A contextual bar that appears next to a selected node. It gives you direct access to node-specific actions such as configuring the node, executing it, resetting it, or opening its view.

Try it now: Open KNIME, create a new empty workflow, click a node in the Node Repository and drag it on to the canvas. Watch how the Node Monitor updates when you select different nodes.

3. A Quick Tour of the KNIME Interface

Once KNIME is installed and opened, the first thing you see is the user interface, often called the UI. It is the main workspace where you build workflows, inspect data, and interact with every part of the platform. Even if you are new to KNIME, the layout is easy to learn because each area of the screen has a clear purpose.

This chapter introduces the major parts of the interface so that you know exactly what you are looking at when you begin working.

Figure 3.1 — KNIME Modern UI General Layout - application tabs, side panel, workflow editor and node monitor

Application Tabs

The row of tabs at the top of the window. Each tab represents either the entry page or an open workflow. It helps you move between multiple workflows without losing your place. Just like switching between browser tabs, you can jump from one workflow to another instantly. Click a tab to bring that workflow into focus. Close tabs when you are finished. This makes it easy to compare workflows side by side or keep reference workflows open while working on a new one.

Side Panel Navigation

The left side of the screen is your central navigation hub. It contains multiple panels that help you explore nodes, understand workflows, and manage files. It helps you understand what the workflow or component contains, what it is meant to do, and any documentation provided. It contains additional tabs for:

Description (Info) A panel that shows information about the currently selected workflow or component. It helps you understand what the workflow or component contains, what it is meant to do, and any documentation the creator has provided.

Node Repository (Nodes) - A list of all available nodes in the KNIME Analytics Platform. This is where you pick the building blocks for your workflow. Each node represents a specific task such as filtering data, reading a file, training a model, or writing to a database.

Space Explorer (Explorer) - A file browser that lets you navigate your KNIME Hub spaces or your local KNIME workspaces. It helps you open, organize, and manage workflows, components, data files, and KNIME Hub content in one place.

Workflow Monitor (Monitor) - A panel that keeps track of the health of your workflow. It helps you see which nodes have errors or warnings. This is especially useful when debugging a workflow or trying to find the step where something went wrong.

Note: K-AI is the AI assistant built into KNIME Analytics Platform. It serves two primary roles: first as a conversational assistant (Q&A mode) and second as a workflow-building partner (Build mode). You don’t need K-AI to follow this guide; we’ll build everything manually so you understand what’s happening.

Workflow Editor

The large central canvas where your workflow is built. It displays your nodes and the connections between them. This is where you design, structure, and execute your entire analytical process.

Help, Preferences, and Menu

A set of menus located at the upper-right area of the interface. It gives you access to help resources, extension installation, application preferences, themes, update settings, and configuration options.

Node Monitor

A panel that displays the output of the currently selected node. It lets you see what your data looks like at each step. You can inspect tables, check flow variable values, and review intermediate results without opening full data views.

Figure 3.2 — User interface elements — workflow toolbar, node action bar, rename components and metanodes

Workflow Toolbar

A compact toolbar at the top-left area of the workflow canvas. It provides quick access to common workflow actions. This includes saving, undoing, redoing, and other commands that apply to the workflow as a whole or to the node you have selected.

Node Repository Result

A filtered list of nodes shown inside the Side Panel after you search in the Node Repository. It displays only the nodes that match your search term or the categories you selected. This makes it much easier to find exactly what you need without scrolling through the entire repository.

Rename

A feature that allows you to rename metanodes and components directly on the workflow canvas. It helps you keep your workflow clean and understandable. Naming larger structures is important because it shows others what the node contains and what role it plays in your process.

Zoom Controls

A set of controls that allow you to zoom in, zoom out, or fit the workflow to your screen. Zooming helps you navigate both large and small workflows. It allows you to move from high-level overviews to detailed node-level adjustments without losing your place.

Node Action Bar

A contextual bar that appears next to a selected node. It gives you direct access to node-specific actions such as configuring the node, executing it, resetting it, or opening its view.

Try it now: Open KNIME, create a new empty workflow, click a node in the Node Repository and drag it on to the canvas. Watch how the Node Monitor updates when you select different nodes.

4. The Workflow Editor: Your Main Canvas

The Workflow Editor is your digital workbench. It is the central canvas where financial data transformation comes to life through visual programming. Unlike Excel where logic hides inside cell formulas, the Workflow Editor displays every step of your analysis process as a connected flow of nodes, making your methodology transparent, auditable, and reproducible.

When you create or open a workflow, the Workflow Editor occupies the large central area of the KNIME interface. This blank canvas is where you'll drag nodes from the Node Repository, configure them for your specific financial tasks, and connect them to create automated data processing pipelines. The canvas provides an infinite workspace that automatically expands as you add more nodes, allowing you to build workflows of any size and complexity.

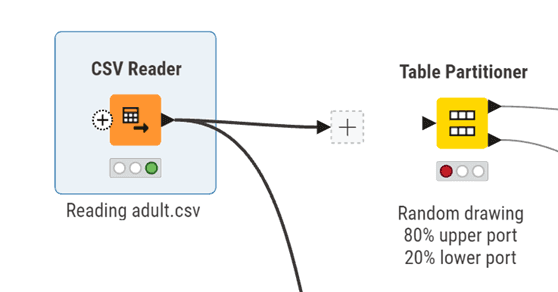

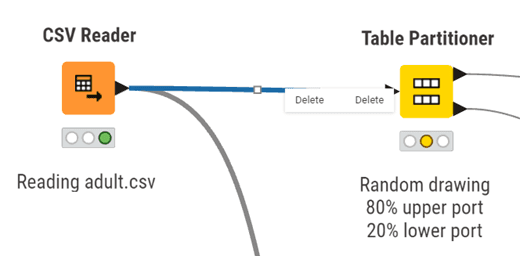

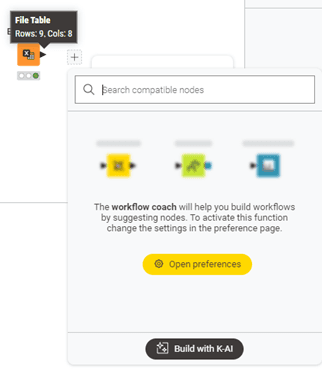

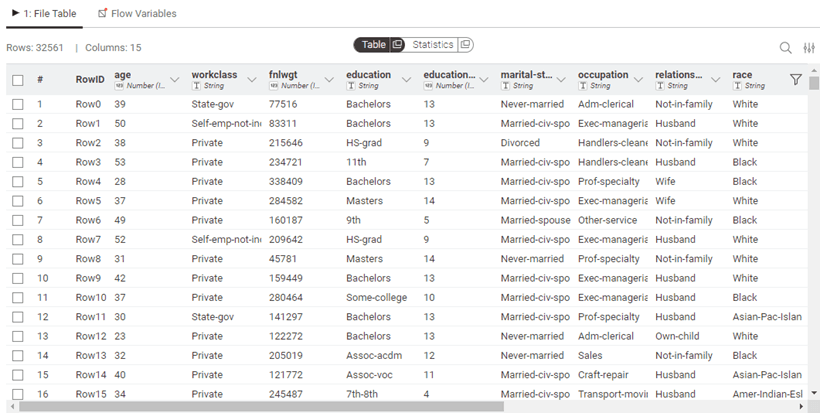

Figure 4.1 — Workflow Editor elements — node description panel, workflow canvas, port outputs, and node execution

Panning Across the Canvas

To move around your workflow, simply click and hold on any empty area of the canvas, then drag in any direction. This works just like moving a map around on your screen. Your mouse cursor changes to a hand icon when you're in panning mode, giving you visual feedback that you're moving the view rather than selecting nodes. This becomes particularly useful when building comprehensive financial processes that extend beyond your visible screen area.

Zooming In and Out

The Zoom Controls appear in the bottom-right corner of the Workflow Editor. Click the plus (+) button to zoom in for detailed work, or the minus (−) button to zoom out to see your entire workflow. You can also use your mouse scroll wheel to scroll up to zoom in, scroll down to zoom out. The "Fit to Screen" button (usually represented by a rectangle icon) automatically adjusts the zoom level so your entire workflow fits in the visible area.

For keyboard users, Ctrl+0 (Windows/Linux) or Cmd+0 (macOS) instantly fits your entire workflow to the screen. This is especially helpful before presenting your workflow to managers or auditors, giving them a complete overview in one glance.

4. The Workflow Editor: Your Main Canvas

The Workflow Editor is your digital workbench. It is the central canvas where financial data transformation comes to life through visual programming. Unlike Excel where logic hides inside cell formulas, the Workflow Editor displays every step of your analysis process as a connected flow of nodes, making your methodology transparent, auditable, and reproducible.

When you create or open a workflow, the Workflow Editor occupies the large central area of the KNIME interface. This blank canvas is where you'll drag nodes from the Node Repository, configure them for your specific financial tasks, and connect them to create automated data processing pipelines. The canvas provides an infinite workspace that automatically expands as you add more nodes, allowing you to build workflows of any size and complexity.

Figure 4.1 — Workflow Editor elements — node description panel, workflow canvas, port outputs, and node execution

Panning Across the Canvas

To move around your workflow, simply click and hold on any empty area of the canvas, then drag in any direction. This works just like moving a map around on your screen. Your mouse cursor changes to a hand icon when you're in panning mode, giving you visual feedback that you're moving the view rather than selecting nodes. This becomes particularly useful when building comprehensive financial processes that extend beyond your visible screen area.

Zooming In and Out

The Zoom Controls appear in the bottom-right corner of the Workflow Editor. Click the plus (+) button to zoom in for detailed work, or the minus (−) button to zoom out to see your entire workflow. You can also use your mouse scroll wheel to scroll up to zoom in, scroll down to zoom out. The "Fit to Screen" button (usually represented by a rectangle icon) automatically adjusts the zoom level so your entire workflow fits in the visible area.

For keyboard users, Ctrl+0 (Windows/Linux) or Cmd+0 (macOS) instantly fits your entire workflow to the screen. This is especially helpful before presenting your workflow to managers or auditors, giving them a complete overview in one glance.

4. The Workflow Editor: Your Main Canvas

The Workflow Editor is your digital workbench. It is the central canvas where financial data transformation comes to life through visual programming. Unlike Excel where logic hides inside cell formulas, the Workflow Editor displays every step of your analysis process as a connected flow of nodes, making your methodology transparent, auditable, and reproducible.

When you create or open a workflow, the Workflow Editor occupies the large central area of the KNIME interface. This blank canvas is where you'll drag nodes from the Node Repository, configure them for your specific financial tasks, and connect them to create automated data processing pipelines. The canvas provides an infinite workspace that automatically expands as you add more nodes, allowing you to build workflows of any size and complexity.

Figure 4.1 — Workflow Editor elements — node description panel, workflow canvas, port outputs, and node execution

Panning Across the Canvas

To move around your workflow, simply click and hold on any empty area of the canvas, then drag in any direction. This works just like moving a map around on your screen. Your mouse cursor changes to a hand icon when you're in panning mode, giving you visual feedback that you're moving the view rather than selecting nodes. This becomes particularly useful when building comprehensive financial processes that extend beyond your visible screen area.

Zooming In and Out

The Zoom Controls appear in the bottom-right corner of the Workflow Editor. Click the plus (+) button to zoom in for detailed work, or the minus (−) button to zoom out to see your entire workflow. You can also use your mouse scroll wheel to scroll up to zoom in, scroll down to zoom out. The "Fit to Screen" button (usually represented by a rectangle icon) automatically adjusts the zoom level so your entire workflow fits in the visible area.

For keyboard users, Ctrl+0 (Windows/Linux) or Cmd+0 (macOS) instantly fits your entire workflow to the screen. This is especially helpful before presenting your workflow to managers or auditors, giving them a complete overview in one glance.

5. All About Nodes

Selecting Nodes

Click any individual node to select it and you'll see it highlighted with a blue border and selection handles (small squares) at its corners. To select multiple nodes, click on empty canvas space and drag to create a selection rectangle. All nodes within or touching this rectangle become selected. Alternatively, hold Ctrl (Windows/Linux) or Cmd (macOS) while clicking individual nodes to add them to your selection one at a time.

Once multiple nodes are selected, you can drag them as a group to reorganize your workflow layout. The connections between nodes automatically adjust to maintain proper links as you move things around.

Understanding Node Anatomy and Structure

Every node in KNIME follows a consistent visual design that communicates its purpose, status, and connectivity at a glance.

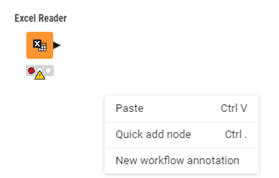

The Node Body

Figure 4.2 - Anatomy of a node

Each node appears as a coloured rectangular box. The node's name appears prominently at the top, with the node type (like "Excel Reader" or "Row Filter") displayed just below. The background color provides quick visual categorisation: purple/pink nodes typically handle data input and output operations, green nodes perform data manipulation and transformation, blue nodes create visualisations, and yellow nodes manage workflow control structures.

Status Indicators: Understanding the Traffic Lights

The small circular indicator in the bottom-right corner of each node tells you its current state through a simple colour system:

Figure 4.3 — Table of Different Traffic Light status

Sometimes a node shows green status (executed successfully) but with a small warning triangle overlay. This means the node completed its work but encountered non-critical issues. For example, it might have found null values or format inconsistencies that it handled by skipping rows.

Warnings don't stop execution but signal you should investigate. Check the Console panel for yellow warning messages explaining what happened.

The spinning gear or progress indicator appears while a node actively executes. For nodes processing large datasets, this might persist for several seconds or minutes. The progress indicator gives feedback that the node is working

Every node in a KNIME workflow has small connection points called ports. These ports determine what kind of information a node can receive and what it can pass on to the next step. They are the way data, models, database connections, and other objects move through your workflow.

Understanding node ports is essential, because the connections you create between ports define the entire flow of your analysis. Once you learn how to read these ports, you can tell at a glance what a node expects as input and what it will produce as output.

Input and Output Ports

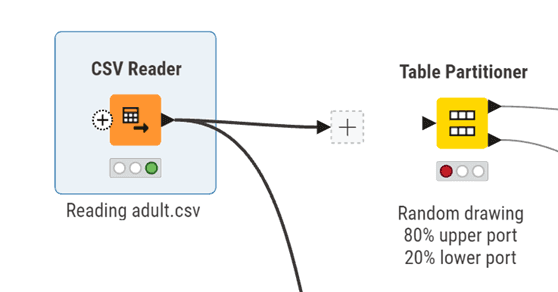

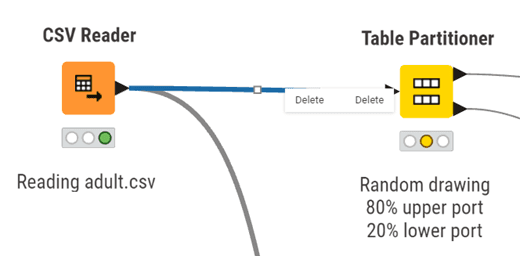

Ports are the small triangular connection points on the sides of nodes. They work like electrical sockets. You plug connections into them to create your data flow.

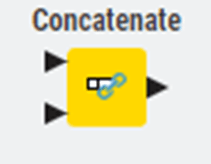

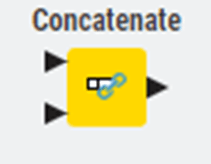

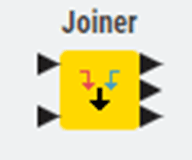

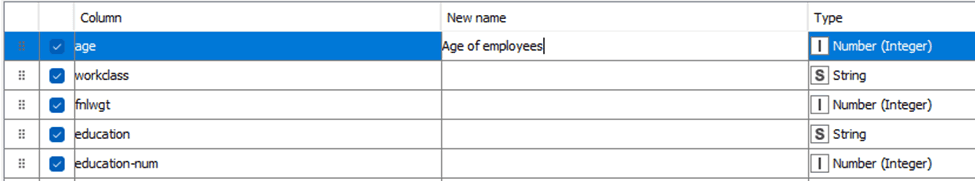

Input ports appear on the left side of nodes and receive data coming in. Most nodes have one input port, but some have multiple inputs for different purposes. For example, a Joiner node (used to merge two tables) has two input ports with one for each table you want to combine.

Output ports appear on the right side of nodes and send data forward to the next step. After a node processes your data, its output port makes the results available for the next node in the sequence. Some nodes have multiple output ports when they produce different types of results or split data in multiple directions.

The triangular shape of ports points in the direction data flows: input triangles point right (into the node), output triangles point left (out of the node).

Port Shape Conventions: Triangles vs. Squares

You may have noticed that some ports are triangular while others are square. This distinction carries meaning about how those ports handle their data.

Triangular Ports: Data Streams: Triangular ports represent data that flows like a stream from one node to the next. Black triangular ports carrying data tables are the most common example. When you connect triangles, the entire data table passes from the first node to the second, which processes it and produces output through its own output triangle. Triangular ports are active and flowing. Data continuously moves and transforms from left to right across your canvas.

Square Ports: Objects and Resources: Square ports represent objects or resources rather than flowing data streams. Database connections (red squares), models (brown squares), reports (blue squares), and images (green squares) are discrete objects that get passed as complete units rather than streamed and transformed. Think of triangular ports as conveyor belts moving items that get processed at each station, while square ports are like passing a complete tool or finished product. The tool or product is self-contained, not continuously modified.

This distinction helps you understand workflows at a glance. Chains of triangular ports show data transformation pipelines, while square ports indicate that complete objects are being passed around.

Port Multiplicity: Single vs. Multiple Connections

Single Input/Output: Most nodes have one input port and one output port, creating a simple chain where data flows in, gets processed, and flows out. A Row Filter has one black input (receives the table to filter) and one black output (sends the filtered table forward). This represents straightforward, linear transformation.

Multiple Inputs: Some nodes have multiple input ports because they need to combine or compare different data sources. The Joiner node has two input ports for merging tables. The Concatenate node can have multiple inputs to stack several tables together. When you see multiple input ports, think about what data sources that node needs to do its job. A node comparing budget to actuals needs two inputs: budget data and actual data.

Multiple Outputs: Some nodes produce multiple outputs. A Row Splitter has two output ports: one for rows that matched your criteria and one for rows that didn't match. This lets you route different subsets of data down different paths in your workflow. Multiple output ports give you branching capability. Data can flow down multiple paths simultaneously, each applying different transformations, then potentially coming back together later through a join or concatenate operation.

Optional Ports: Some input ports are optional, shown with a dashed triangle outline. The node can execute without these connections, but connecting them enables additional functionality. For example, many writer nodes have an optional red flow variable input at the top. Without it, the node writes to your manually configured file path. With it connected, the node uses a dynamic path, enabling automated file naming like "GL_Report_January_2025.xlsx" without monthly reconfiguration. Optional ports tell you: "This node works without this connection, but you can enhance it by connecting something here." As a beginner, you can typically ignore optional ports until you need their advanced functionality.

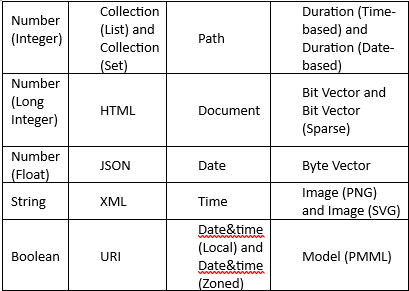

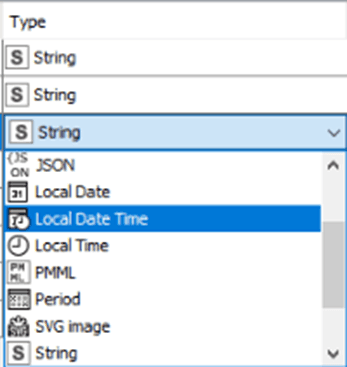

Port Colours and What They Mean

KNIME uses several port types to handle specialised data and operations. Understanding these variations helps you recognise what different nodes do and how they fit into more advanced workflows.

Figure 4.4 — Different Port Types

Data Table Ports (Black Triangle Ports): The node shown with black triangular ports on both sides represents the most common port type in KNIME. These black triangular ports carry data tables with rows and columns, similar to what you see in Excel spreadsheets or database tables. This is the port type you'll use most frequently in financial workflows. When you import general ledger data with Excel Reader, filter transactions with Row Filter, summarise amounts with Group By, or export results with Excel Writer, you're working with black data table ports throughout. The triangular shape points in the direction data flows: input triangles point right (into the node), output triangles point left (out of the node). Nearly every financial workflow consists primarily of nodes connected by black ports, forming a chain of data transformations from raw source data through calculations and filtering to final reports. Understanding black data table ports is fundamental because they handle all your actual financial data as it moves through the workflow.

Database Connection Ports (Red Square Ports): The node shown with red square ports represents a Database Connection node. Unlike triangular black ports that carry actual data tables, these square red ports carry an active connection to a database system like SQL Server, Oracle, or your company's ERP database. Think of this as the difference between having a phone number (the connection) versus having the actual conversation (the data). The Database Connection node establishes the link to your database but doesn't transfer any data yet. You then plug this connection into other database-aware nodes that use it to read or write data. For finance professionals, this is valuable when your financial data lives in databases rather than Excel files. Instead of exporting transactions to Excel first, you connect directly to the database and bring in only the data you need. This is faster, more secure, and keeps you working with current data.

Flow Variable Ports (Red Circle Ports): The node displaying red circular ports on both input and output sides represents Flow Variable operations. Flow variables are special parameters that control how your workflow behaves without carrying actual data tables. These ports always appear at the top of nodes, separate from the main data ports on the sides. Flow variables let you store and pass along values like file paths, date ranges, calculation thresholds, or any other settings that need to change dynamically. For example, instead of hard-coding "January 2025" in multiple places throughout your workflow, you could store "January 2025" as a flow variable at the beginning, then reference that variable throughout your workflow. When February arrives, you change the variable in one place and the entire workflow updates automatically. The red circular ports connect nodes that create, modify, or use these variables. While this is an advanced feature you won't need immediately, flow variables are what transform a single-use workflow into a reusable, automated process that runs month after month with minimal manual intervention.

Tree Ensemble Model Ports (Brown Square Ports) The node with brown square ports represents machine learning model operations, specifically Tree Ensemble Models. These are predictive models that analyse historical patterns to make predictions about future outcomes. In finance, you might use these for forecasting revenue, predicting delinquent accounts receivable, detecting potentially fraudulent transactions, or estimating project costs. The node that trains the model produces a brown output port carrying the trained model. You then connect this to a predictor node that applies the model to new data. While more advanced than basic reporting, these predictive capabilities can add significant value to financial planning and analysis as you grow comfortable with KNIME.

Report Ports (Blue Square Ports): The node showing blue square ports handles Report objects. KNIME's reporting capabilities let you create formatted, professional reports combining data tables, charts, text, and images. These reports can be exported as PDF, Word documents, or HTML files. Report ports carry the report object as it's being built. You might connect multiple data sources and visualisations into a report assembly node, which combines them into a single formatted document. The blue port output connects to a report writer that generates the final file. For finance professionals, this transforms KNIME from a data processing tool into a complete reporting solution. Your workflow can import data, perform calculations, create charts, assemble everything into a formatted report, and save or email it automatically.

Image Ports (Green Square Ports): The node with green square ports carries Image objects. These ports transport actual image files or graphics generated by KNIME nodes. When you create a chart or visualisation in KNIME, that visual output flows through green image ports. This is useful when embedding charts in reports, saving multiple visualisations to separate files, or combining several charts into a dashboard. For example, you might create a budget-versus-actuals bar chart, output it through a green image port, and connect that to a report node that includes the chart in your monthly variance report. Image ports give you control over how visualisations flow through your workflow and where they end up, whether embedded in reports, saved as standalone files, or combined into dashboards.

From our deployments: New users overthink port colours. In 90% of finance workflows, you'll only use black data ports. We typically don't introduce database or model ports until week two of training. Focus on the black triangles first.

5. All About Nodes

Selecting Nodes

Click any individual node to select it and you'll see it highlighted with a blue border and selection handles (small squares) at its corners. To select multiple nodes, click on empty canvas space and drag to create a selection rectangle. All nodes within or touching this rectangle become selected. Alternatively, hold Ctrl (Windows/Linux) or Cmd (macOS) while clicking individual nodes to add them to your selection one at a time.

Once multiple nodes are selected, you can drag them as a group to reorganize your workflow layout. The connections between nodes automatically adjust to maintain proper links as you move things around.

Understanding Node Anatomy and Structure

Every node in KNIME follows a consistent visual design that communicates its purpose, status, and connectivity at a glance.

The Node Body

Figure 4.2 - Anatomy of a node

Each node appears as a coloured rectangular box. The node's name appears prominently at the top, with the node type (like "Excel Reader" or "Row Filter") displayed just below. The background color provides quick visual categorisation: purple/pink nodes typically handle data input and output operations, green nodes perform data manipulation and transformation, blue nodes create visualisations, and yellow nodes manage workflow control structures.

Status Indicators: Understanding the Traffic Lights

The small circular indicator in the bottom-right corner of each node tells you its current state through a simple colour system:

Figure 4.3 — Table of Different Traffic Light status

Sometimes a node shows green status (executed successfully) but with a small warning triangle overlay. This means the node completed its work but encountered non-critical issues. For example, it might have found null values or format inconsistencies that it handled by skipping rows.

Warnings don't stop execution but signal you should investigate. Check the Console panel for yellow warning messages explaining what happened.

The spinning gear or progress indicator appears while a node actively executes. For nodes processing large datasets, this might persist for several seconds or minutes. The progress indicator gives feedback that the node is working

Every node in a KNIME workflow has small connection points called ports. These ports determine what kind of information a node can receive and what it can pass on to the next step. They are the way data, models, database connections, and other objects move through your workflow.

Understanding node ports is essential, because the connections you create between ports define the entire flow of your analysis. Once you learn how to read these ports, you can tell at a glance what a node expects as input and what it will produce as output.

Input and Output Ports

Ports are the small triangular connection points on the sides of nodes. They work like electrical sockets. You plug connections into them to create your data flow.

Input ports appear on the left side of nodes and receive data coming in. Most nodes have one input port, but some have multiple inputs for different purposes. For example, a Joiner node (used to merge two tables) has two input ports with one for each table you want to combine.

Output ports appear on the right side of nodes and send data forward to the next step. After a node processes your data, its output port makes the results available for the next node in the sequence. Some nodes have multiple output ports when they produce different types of results or split data in multiple directions.

The triangular shape of ports points in the direction data flows: input triangles point right (into the node), output triangles point left (out of the node).

Port Shape Conventions: Triangles vs. Squares

You may have noticed that some ports are triangular while others are square. This distinction carries meaning about how those ports handle their data.

Triangular Ports: Data Streams: Triangular ports represent data that flows like a stream from one node to the next. Black triangular ports carrying data tables are the most common example. When you connect triangles, the entire data table passes from the first node to the second, which processes it and produces output through its own output triangle. Triangular ports are active and flowing. Data continuously moves and transforms from left to right across your canvas.

Square Ports: Objects and Resources: Square ports represent objects or resources rather than flowing data streams. Database connections (red squares), models (brown squares), reports (blue squares), and images (green squares) are discrete objects that get passed as complete units rather than streamed and transformed. Think of triangular ports as conveyor belts moving items that get processed at each station, while square ports are like passing a complete tool or finished product. The tool or product is self-contained, not continuously modified.